To build this mechanism I will use the following Azure resources.

Event Grid is the key component: it acts as the main event processor and contains:

- Topics: Azure resources that represent the components that generate events.

- Subscriptions/endpoints: the Azure resources that handle the events.

The relationship between publishers, events, topics, and subscribers/endpoints is shown in the diagram below.

Event Grid also provides:

- Dead-letter queue and retry policy: if a message cannot be delivered to the endpoint, Event Grid retries according to the retry policy, and undeliverable events can be sent to a dead-letter destination, so you should configure both.

- Event filtering: rules that let Event Grid deliver only specific event types to the endpoint. For example, when a new VM is created in the topic's scope (resource group, subscription, etc.), the event is caught and delivered to the endpoint (Service Bus queue, storage queue).
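To make the filtering rule concrete, here is a small Python sketch (language-neutral; the real function app is PowerShell) of the matching logic behind the subscription filter that the CI pipeline configures: an included event type of Microsoft.Resources.ResourceWriteSuccess plus a StringContains advanced filter on data.operationName. Event Grid evaluates filters on its own side, so this only models the rule; the sample events are hand-written and trimmed to the fields the filter reads.

```python
# Models the subscription filter used in this post:
#   --included-event-types Microsoft.Resources.ResourceWriteSuccess
#   --advanced-filter data.operationName StringContains 'Microsoft.Compute/virtualMachines/write'
# Event Grid applies this server-side; the code only illustrates the logic.

INCLUDED_EVENT_TYPES = {"Microsoft.Resources.ResourceWriteSuccess"}
OPERATION_SUBSTRING = "Microsoft.Compute/virtualMachines/write"


def matches_subscription_filter(event: dict) -> bool:
    """Return True if the event would be delivered to the endpoint."""
    if event.get("eventType") not in INCLUDED_EVENT_TYPES:
        return False
    operation = event.get("data", {}).get("operationName", "")
    # String advanced filters are compared case-insensitively.
    return OPERATION_SUBSTRING.lower() in operation.lower()


# Hand-written sample events (not real Event Grid output).
vm_created = {
    "eventType": "Microsoft.Resources.ResourceWriteSuccess",
    "data": {"operationName": "Microsoft.Compute/virtualMachines/write"},
}
disk_created = {
    "eventType": "Microsoft.Resources.ResourceWriteSuccess",
    "data": {"operationName": "Microsoft.Compute/disks/write"},
}
vm_deleted = {
    "eventType": "Microsoft.Resources.ResourceDeleteSuccess",
    "data": {"operationName": "Microsoft.Compute/virtualMachines/delete"},
}

for name, evt in [("vm_created", vm_created), ("disk_created", disk_created),
                  ("vm_deleted", vm_deleted)]:
    print(name, matches_subscription_filter(evt))
```

Only the VM write event passes; without the advanced filter, the disk write would be delivered as well.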

Queue Storage Account

The storage account's queues serve as the main event store: every event that Event Grid generates ends up in a queue.

Azure Function

Functions act as microservices that contain the logic to validate the required resources and connections. Each function may also use a database to store configuration or state data.
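As a rough illustration of a "validate required connections" step, the Python sketch below first checks that the required parameters are present and then tests whether the database server accepts TCP connections. The parameter names are hypothetical, and this is only a reachability check: it does not log in or run a query the way a real database client would.

```python
import socket
from typing import Mapping, Tuple

# Hypothetical parameter names for a database connection check.
REQUIRED = ("server", "port", "user", "password")


def missing_parameters(params: Mapping[str, object]) -> list:
    """Names of required parameters that are absent or empty."""
    return [name for name in REQUIRED if not params.get(name)]


def server_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """TCP reachability only: no login, no query."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure or timeout
        return False


def validate_connection(params: Mapping[str, object]) -> Tuple[bool, str]:
    """Return (ok, reason) so the caller can decide whether to retry."""
    missing = missing_parameters(params)
    if missing:
        return False, "missing parameters: " + ", ".join(missing)
    host, port = str(params["server"]), int(params["port"])
    if not server_reachable(host, port):
        return False, f"cannot reach {host}:{port}"
    return True, "ok"


# Fails fast on the parameter check without touching the network.
print(validate_connection({"server": "sql.example.internal", "port": 1433, "user": "app"}))
```

Returning a reason string rather than raising keeps the routing decision (succeed or retry later) with the caller.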

With this high-level overview in place, the sections below go into more detail.

Architecture

As you may have noticed, Event Grid is linked to a subscription that listens for events related to new virtual machine creation. Filtering is necessary here; otherwise, Event Grid generates a message whenever any resource is created in the subscription or in the target resource group. All delivered events land in the storage queue. In my project I use three queues:

- The main queue is the destination for all messages from Event Grid.

- The retry queue receives messages that failed the first validation steps and were scheduled for a later retry.

- The succeeded queue holds all successfully processed messages; in my project I use it for statistics and reporting.
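The routing between these three queues can be sketched in Python with in-memory deques standing in for the storage queues. The attempt counter, the MAX_ATTEMPTS cap and the "abandoned" bucket are hypothetical additions for illustration; the post does not say how many retries are allowed.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable

MAX_ATTEMPTS = 3  # hypothetical cap; not specified in the post


@dataclass
class EventQueues:
    """In-memory stand-ins for the main, retry and succeeded storage queues."""
    main: deque = field(default_factory=deque)
    retry: deque = field(default_factory=deque)
    succeeded: deque = field(default_factory=deque)
    abandoned: list = field(default_factory=list)  # hypothetical: gave up


def route(message: dict, validate: Callable[[dict], bool], queues: EventQueues) -> str:
    """Validate one message and move it to the queue that matches the outcome."""
    if validate(message):
        queues.succeeded.append(message)
        return "succeeded"
    attempts = message.get("attempts", 0) + 1
    updated = {**message, "attempts": attempts}
    if attempts >= MAX_ATTEMPTS:
        queues.abandoned.append(updated)  # e.g. log it and alert the administrator
        return "abandoned"
    queues.retry.append(updated)
    return "retry"


def is_valid(message: dict) -> bool:
    return message["vm"] != "vm-db-down"  # stand-in for the real validation


queues = EventQueues()
queues.main.extend([{"vm": "vm-web-01"}, {"vm": "vm-db-down"}])
while queues.main:
    print(route(queues.main.popleft(), is_valid, queues))
```

A retry pass would call the same route function on messages taken from queues.retry, so both paths share one decision point.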

The storage account is also linked to Azure Log Analytics, which collects logs and drives alerts. For example, if the retry queue holds more messages than expected, Log Analytics logs an error and alerts the administrator.

The next component is the Azure function app, which contains several Azure functions for validation, message processing, and triggering the runbook.

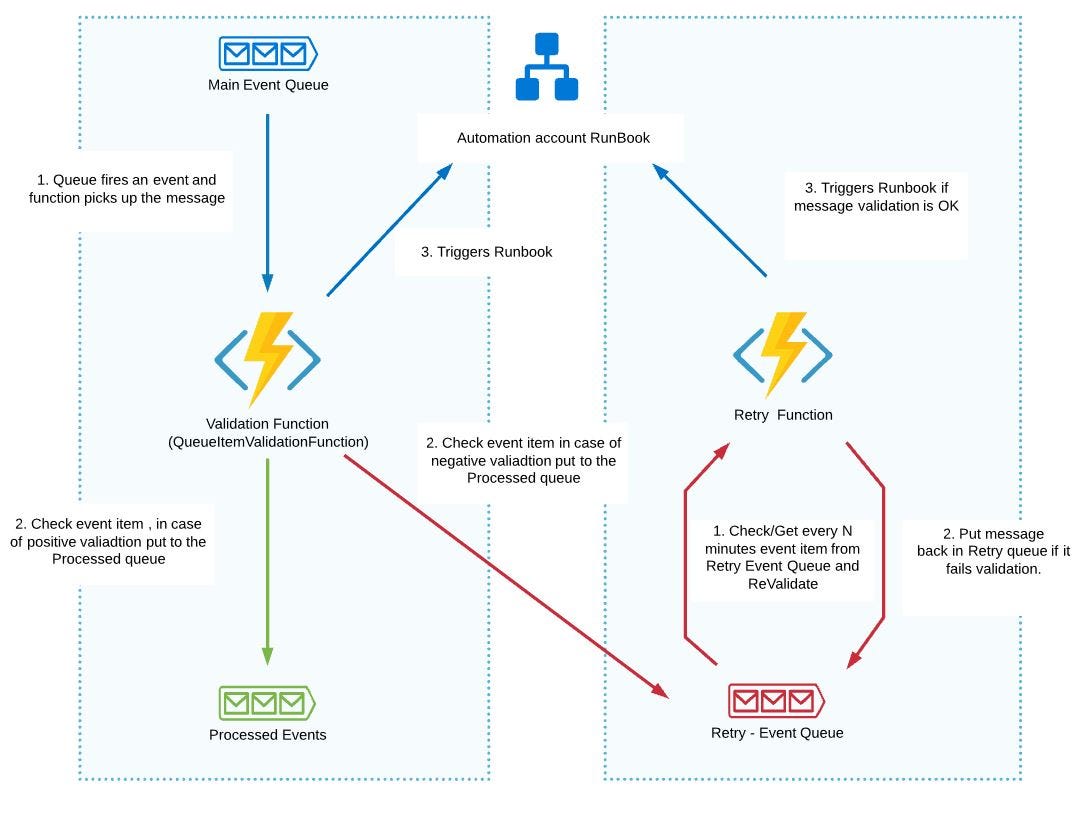
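The runbook trigger mentioned above is implemented in PowerShell in this project. As a rough Python equivalent, the sketch below assembles (but does not send) an Azure Resource Manager request that creates an Azure Automation job for a runbook. The api-version, runbook name and parameter names are assumptions; check the URL shape and body against the Azure Automation REST reference before relying on it.

```python
import json
import urllib.request
import uuid
from typing import Mapping, Optional

ARM = "https://management.azure.com"
API_VERSION = "2019-06-01"  # assumption: verify against the Automation REST reference


def build_runbook_job_request(subscription_id: str, resource_group: str, account: str,
                              runbook: str, parameters: Mapping[str, str], token: str,
                              job_id: Optional[str] = None) -> urllib.request.Request:
    """Build a PUT that creates a job for `runbook`; the job name is a new GUID."""
    job_id = job_id or str(uuid.uuid4())
    url = (f"{ARM}/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
           f"/providers/Microsoft.Automation/automationAccounts/{account}"
           f"/jobs/{job_id}?api-version={API_VERSION}")
    body = {"properties": {"runbook": {"name": runbook}, "parameters": dict(parameters)}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical runbook and parameter names.
req = build_runbook_job_request("<subscription-id>", "rg-demo", "aa-demo",
                                "Configure-NewVm", {"VmName": "vm-web-01"}, "<token>")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send it once a real ARM token is supplied.
```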

The Validation Function is bound to the main event queue: when Event Grid sends a message to that queue, the function is triggered automatically.

The Retry Function uses a timer trigger and runs periodically to pick up failed messages that were scheduled for retry (in the retry queue).
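Timer triggers in Azure Functions are scheduled with six-field NCRONTAB expressions ({second} {minute} {hour} {day} {month} {day-of-week}); this project uses 0 */5 * * * *, i.e. every five minutes. The Python sketch below shows when such a schedule fires. It deliberately supports only a small subset of the syntax ("*", "*/n" and single numbers) and is not a full NCRONTAB parser.

```python
from datetime import datetime, timedelta

# NCRONTAB field order: {second} {minute} {hour} {day} {month} {day-of-week}
FIELD_MINIMUMS = (0, 0, 0, 1, 1, 0)


def field_matches(expr: str, value: int, minimum: int) -> bool:
    """Small subset only: '*', '*/n' and a single integer."""
    if expr == "*":
        return True
    if expr.startswith("*/"):
        return (value - minimum) % int(expr[2:]) == 0
    return value == int(expr)


def fires_at(schedule: str, t: datetime) -> bool:
    fields = schedule.split()
    if len(fields) != 6:
        raise ValueError("expected six NCRONTAB fields")
    day_of_week = (t.weekday() + 1) % 7  # Python: Monday=0; NCRONTAB: Sunday=0
    values = (t.second, t.minute, t.hour, t.day, t.month, day_of_week)
    return all(field_matches(f, v, m) for f, v, m in zip(fields, values, FIELD_MINIMUMS))


def next_runs(schedule: str, start: datetime, count: int) -> list:
    """Brute-force the next `count` firing times, one second at a time."""
    runs, t = [], start.replace(microsecond=0)
    while len(runs) < count:
        t += timedelta(seconds=1)
        if fires_at(schedule, t):
            runs.append(t)
    return runs


for run in next_runs("0 */5 * * * *", datetime(2024, 1, 1, 10, 2, 30), 3):
    print(run.time())
```

Note the leading seconds field: a five-field Unix cron expression will not work as a Functions timer schedule.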

The API Function (HTTP trigger) lets you trigger (re-run) the whole process from a runbook, an admin UI, etc.

The complete function workflow is described below.

Architecture code base

The function app is written in PowerShell (using PowerShell classes) and follows OOP principles. This approach yields modular, maintainable code and makes it easy to add or remove functions at any time.

Why did I opt for PowerShell rather than C# or JavaScript? The main reason is that a PowerShell-based solution can be supported not only by developers but also by cloud administrators and system engineers.

Azure function app

I've placed the solution in a single Azure function app on the Consumption plan, so it uses few resources when idle, while the platform automatically scales out (adds instances) when more CPU or memory is needed.

Each function consists of a run file (run.ps1) that contains its code and a bindings configuration file (function.json) where its triggers are set up. The solution also contains common classes (modules) with code reused across functions and operations on cloud resources; for example, the AutomationAccountManager module contains the function that triggers the runbook.
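To show what that layout looks like on disk, here is a Python sketch that scaffolds one folder per function, each with a function.json and a placeholder run.ps1, plus a root host.json. The binding shapes follow the standard function.json schema for queue, timer and HTTP triggers; the folder names RetryFunction and ApiFunction and the queue name are hypothetical.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical folder and queue names; the binding shapes follow function.json.
BINDINGS = {
    "ValidationFunction": [{
        "name": "QueueItem", "type": "queueTrigger", "direction": "in",
        "queueName": "vm-events-main", "connection": "AzureWebJobsStorage",
    }],
    "RetryFunction": [{
        "name": "Timer", "type": "timerTrigger", "direction": "in",
        "schedule": "0 */5 * * * *",
    }],
    "ApiFunction": [
        {"name": "Request", "type": "httpTrigger", "direction": "in",
         "authLevel": "function", "methods": ["post"]},
        {"name": "Response", "type": "http", "direction": "out"},
    ],
}


def scaffold(root: Path) -> list:
    """Create <Function>/function.json and a placeholder run.ps1 per function."""
    for function_name, bindings in BINDINGS.items():
        folder = root / function_name
        folder.mkdir(parents=True, exist_ok=True)
        (folder / "function.json").write_text(json.dumps({"bindings": bindings}, indent=2))
        # The trigger binding's "name" becomes the run.ps1 parameter ($QueueItem, ...).
        (folder / "run.ps1").write_text(f"param(${bindings[0]['name']})\n")
    (root / "host.json").write_text(json.dumps({"version": "2.0"}, indent=2))
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())


with tempfile.TemporaryDirectory() as tmp:
    for path in scaffold(Path(tmp)):
        print(path)
```

The key detail is that the binding's "name" must match the parameter declared in run.ps1; that is how $QueueItem receives the queue payload.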

Below you can see the code sample of the main ValidationFunction.

As you can see, I reference the Common modules in the file header and then declare the input binding with the param block. When a new VM is created and the event data is placed in the main queue, this function is triggered and the $QueueItem variable receives the payload (here is an example of the Event Grid payload), including information about the VM.
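For resource events, the Event Grid subject is the ARM resource ID of the affected resource, so the VM's subscription, resource group and name can be read straight from it. The Python sketch below does this for a hand-written sample payload (placeholder IDs, trimmed to a few fields); it is an illustration of the payload shape, not the ValidationFunction code itself.

```python
import json

# Hand-written sample of an Event Grid resource event; IDs are placeholders.
SAMPLE_QUEUE_ITEM = json.dumps({
    "id": "11111111-1111-1111-1111-111111111111",
    "topic": "/subscriptions/00000000-0000-0000-0000-000000000000",
    "subject": "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/rg-demo"
               "/providers/Microsoft.Compute/virtualMachines/vm-web-01",
    "eventType": "Microsoft.Resources.ResourceWriteSuccess",
    "eventTime": "2024-01-01T10:00:00.0000000Z",
    "data": {
        "operationName": "Microsoft.Compute/virtualMachines/write",
        "status": "Succeeded",
        "resourceProvider": "Microsoft.Compute",
    },
})


def parse_vm_event(queue_item: str) -> dict:
    """Extract the VM coordinates from an Event Grid resource event."""
    event = json.loads(queue_item)
    # Subject shape: /subscriptions/{sub}/resourceGroups/{rg}/providers/{ns}/{type}/{name}
    parts = event["subject"].strip("/").split("/")
    pairs = {parts[i].lower(): parts[i + 1] for i in range(0, len(parts) - 1, 2)}
    return {
        "event_id": event["id"],
        "subscription_id": pairs["subscriptions"],
        "resource_group": pairs["resourcegroups"],
        "vm_name": pairs["virtualmachines"],
        "operation": event["data"]["operationName"],
    }


print(parse_vm_event(SAMPLE_QUEUE_ITEM))
```

Keys are lower-cased before lookup because ARM resource IDs are case-insensitive and casing varies between services.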

Common classes/modules

- The Validation module contains the logic for checking a database server connection, though you can add other validation logic there. It first checks the parameters, converts the password to a secure string, and builds a PSCredential object; it then installs the SimplySql module if it isn't present (SimplySql provides the logic to open connections and run queries against the database).

- Automation Account Manager is a module that contains the logic to trigger a runbook and to retrieve the VM's private IP. It can also hold other automation-account logic, for example creating a runbook from a template or removing one.

- Configuration retrieves options from the local and main settings files and provides the logic to switch between local settings and prod/test environments. I explain configuration in more detail in the CI/CD Architecture and pipeline section below.

- AuthorisationManager is a module that provides access to Azure resources. It is based on Azure Managed Identities and uses the OAuth2 protocol with JWT tokens. It also supports local development of the function app: to use this option, you obtain a JWT token and update the auth variable in the module. MSI with AuthorisationManager is explained in detail in the CI/CD Architecture and pipeline section.

- QueueManager represents the storage account's queues and contains operations to get messages and to add (create) messages.
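Azure Storage queues have a few semantics that a queue manager has to respect: getting a message hides it for a visibility timeout and returns a pop receipt, deleting requires both the message ID and the current pop receipt, each get increments the dequeue count, and a message can be put on the queue already invisible for a while (a simple way to schedule a retry). The Python class below is a hypothetical in-memory stand-in that mimics those rules for local tests; it is not the Azure SDK.

```python
import time
import uuid
from dataclasses import dataclass


@dataclass
class _Message:
    id: str
    content: str
    visible_at: float
    dequeue_count: int = 0
    pop_receipt: str = ""


class InMemoryQueueManager:
    """Hypothetical local stand-in mirroring Storage Queue get/add/delete rules."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._messages = []

    def add_message(self, content: str, visibility_delay: float = 0.0) -> str:
        """Add a message; a delay keeps it invisible (e.g. a scheduled retry)."""
        msg = _Message(str(uuid.uuid4()), content, self._clock() + visibility_delay)
        self._messages.append(msg)
        return msg.id

    def get_messages(self, max_messages: int = 1, visibility_timeout: float = 30.0) -> list:
        """Return (id, pop_receipt, content, dequeue_count) and hide each message."""
        now, batch = self._clock(), []
        for msg in self._messages:
            if len(batch) == max_messages:
                break
            if msg.visible_at <= now:
                msg.visible_at = now + visibility_timeout
                msg.dequeue_count += 1
                msg.pop_receipt = str(uuid.uuid4())
                batch.append((msg.id, msg.pop_receipt, msg.content, msg.dequeue_count))
        return batch

    def delete_message(self, message_id: str, pop_receipt: str) -> None:
        for i, msg in enumerate(self._messages):
            if msg.id == message_id and msg.pop_receipt == pop_receipt:
                del self._messages[i]
                return
        raise KeyError("message not found or pop receipt is stale")


clock = [0.0]
qm = InMemoryQueueManager(clock=lambda: clock[0])
qm.add_message('{"vm": "vm-web-01"}')
qm.add_message('{"vm": "vm-db-01"}', visibility_delay=300)  # retry in 5 minutes
print(len(qm.get_messages(max_messages=32)))  # only the first message is visible yet
```

Injecting the clock keeps tests deterministic; a message that is fetched but never deleted simply becomes visible again after its timeout.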

CI/CD Architecture and pipeline

Before explaining the CI/CD architecture and pipeline, let's cover Azure Managed Identities.

Managed identities

Managed Identities (MSI) is a service that allows your applications or functions to access other Azure resources. MSI is based on service principals and OAuth2. In my case, the function app needs access to the storage queue.

When MSI is enabled for a resource, Azure creates a service principal in Active Directory associated with that resource. This principal has no permissions by default, so we need to assign them explicitly; this is the last step of the pipeline.
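The explicit permission grant can be expressed as an Azure CLI role assignment. The Python sketch below only assembles the command; it assumes the built-in "Storage Queue Data Contributor" role (the post does not name the role it assigns), and the principal ID, subscription, resource group, account and queue names are placeholders. Scoping to a single queue is optional; omitting the queue grants access to all queues in the account.

```python
import shlex
from typing import List, Optional


def storage_scope(subscription_id: str, resource_group: str, account: str,
                  queue: Optional[str] = None) -> str:
    """ARM scope of a storage account, or of one queue inside it."""
    scope = (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
             f"/providers/Microsoft.Storage/storageAccounts/{account}")
    if queue:
        scope += f"/queueServices/default/queues/{queue}"
    return scope


def role_assignment_command(principal_id: str, scope: str,
                            role: str = "Storage Queue Data Contributor") -> List[str]:
    """az CLI arguments granting the function app's identity access to `scope`."""
    return ["az", "role", "assignment", "create",
            "--assignee-object-id", principal_id,
            "--assignee-principal-type", "ServicePrincipal",
            "--role", role,
            "--scope", scope]


cmd = role_assignment_command("<function-app-principal-id>",
                              storage_scope("<subscription-id>", "rg-demo", "stvmevents"))
print(shlex.join(cmd))
# In a pipeline step: subprocess.run(cmd, check=True) after `az login`.
```

Passing the object ID with an explicit principal type avoids a directory lookup, which can fail right after the identity is created.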

Once the principal is created and the required permission is assigned, MSI works using the OAuth2 token flow. Before accessing the storage queue, the function app's service principal requests a JWT token from AAD; the function then presents this token when calling Queue Storage, which validates it before granting access. The whole process is shown in the diagram below.
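The two HTTP calls in that flow can be sketched in Python. Inside Azure Functions, the managed identity endpoint is exposed through the IDENTITY_ENDPOINT and IDENTITY_HEADER environment variables (api-version 2019-08-01); the token it returns is then sent as a bearer token to Queue Storage, which requires an x-ms-version of 2017-11-09 or later for OAuth. The account and queue names are placeholders, and the requests are only built here, not sent.

```python
import os
import urllib.parse
import urllib.request
from typing import Mapping

STORAGE_RESOURCE = "https://storage.azure.com/"


def build_token_request(env: Mapping[str, str] = os.environ,
                        resource: str = STORAGE_RESOURCE) -> urllib.request.Request:
    """Step 1: ask the local managed identity endpoint for an AAD token."""
    query = urllib.parse.urlencode({"resource": resource, "api-version": "2019-08-01"})
    return urllib.request.Request(
        f"{env['IDENTITY_ENDPOINT']}?{query}",
        headers={"X-IDENTITY-HEADER": env["IDENTITY_HEADER"]},
    )


def build_queue_peek_request(account: str, queue: str,
                             access_token: str) -> urllib.request.Request:
    """Step 2: call Queue Storage with the token; Storage validates it."""
    return urllib.request.Request(
        f"https://{account}.queue.core.windows.net/{queue}/messages?peekonly=true",
        headers={"Authorization": f"Bearer {access_token}", "x-ms-version": "2019-12-12"},
    )


# Inside Azure (not run here):
#   token = json.load(urllib.request.urlopen(build_token_request()))["access_token"]
#   urllib.request.urlopen(build_queue_peek_request("stvmevents", "vm-events-main", token))
demo = build_token_request({"IDENTITY_ENDPOINT": "http://localhost:8081/msi/token",
                            "IDENTITY_HEADER": "<secret>"})
print(demo.full_url)
```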

The details of MSI are out of scope for this article; however, here and here you can find additional information and examples on this topic.

There are two ways to implement this process in a PowerShell function app:

- Use the profile.ps1 file, which contains a few lines of code that perform this authentication. The drawback of this approach is that it does not always work locally.

- Create your own class/module that implements the MSI authentication process. You can see an example below.

AuthenticationTokenManager authenticates using MSI and allows switching between the local and the "real" environment. For local development it requires a JWT token, which you can obtain with az account get-access-token --resource 'https://resource.azure.net'.
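The local/real switch can be sketched in Python as follows: use the managed identity when Azure's identity variables are present (IDENTITY_* on current runtimes, MSI_* on older ones), otherwise use a pasted token if it has not expired, otherwise ask the Azure CLI. LOCAL_ACCESS_TOKEN is a hypothetical variable name, and msi_fetch is any callable that requests a token from the managed identity endpoint. The expiry check decodes the JWT payload without verifying its signature, which is acceptable only for deciding whether to refresh a token.

```python
import base64
import json
import os
import subprocess
import time
from typing import Callable, Mapping, Optional, Tuple


def jwt_expiry(token: str) -> int:
    """Read the 'exp' claim WITHOUT verifying the signature (local use only)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64url padding
    return int(json.loads(base64.urlsafe_b64decode(payload))["exp"])


def az_cli_token(resource: str) -> str:
    """Ask the Azure CLI for a token (requires a prior `az login`)."""
    result = subprocess.run(
        ["az", "account", "get-access-token", "--resource", resource,
         "--query", "accessToken", "-o", "tsv"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def running_in_azure(env: Mapping[str, str]) -> bool:
    return bool((env.get("IDENTITY_ENDPOINT") and env.get("IDENTITY_HEADER"))
                or (env.get("MSI_ENDPOINT") and env.get("MSI_SECRET")))


def get_access_token(resource: str, msi_fetch: Callable[[str], str],
                     env: Optional[Mapping[str, str]] = None,
                     cli_fetch: Callable[[str], str] = az_cli_token,
                     now: Optional[float] = None) -> Tuple[str, str]:
    """Return (source, token): managed identity in Azure, a local token otherwise."""
    env = os.environ if env is None else env
    now = time.time() if now is None else now
    if running_in_azure(env):
        return "msi", msi_fetch(resource)
    pasted = env.get("LOCAL_ACCESS_TOKEN")  # hypothetical variable name
    if pasted and jwt_expiry(pasted) > now + 60:  # keep a 60 s safety margin
        return "local", pasted
    return "az-cli", cli_fetch(resource)
```

Keeping the two fetchers injectable lets the same code path run in Azure, on a developer machine, and in unit tests.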

CI Pipeline

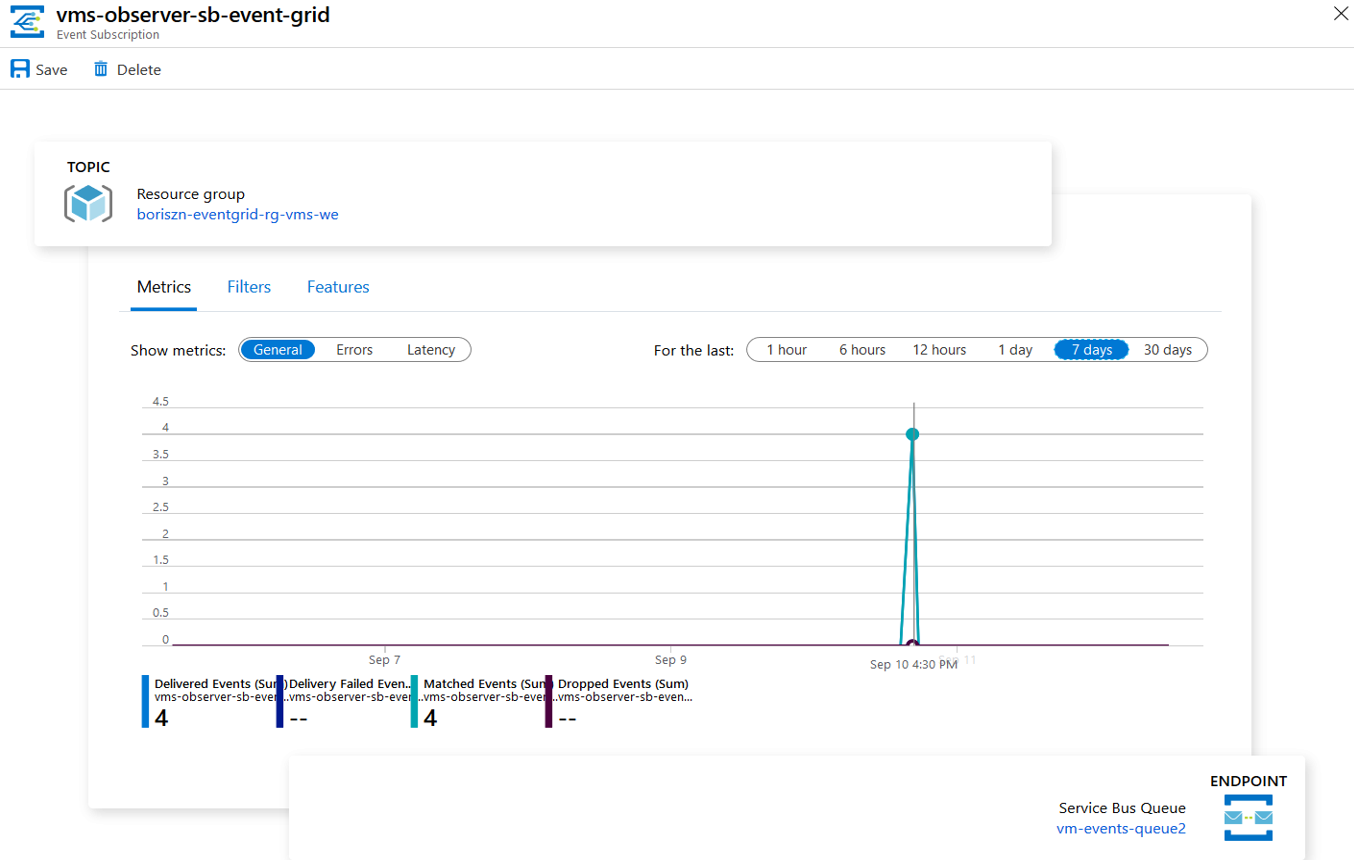

The pipeline sets up all the required infrastructure. First, it creates the Event Grid topic and configures the scope it observes, a subscription or resource group (here you can find the Event Grid sources). It also creates the storage account with three queues for the event store. The last step of this code configures the Event Grid subscription filter so that it fires only when a new VM appears:

```bash
az eventgrid event-subscription create \
    .... \
    --included-event-types Microsoft.Resources.ResourceWriteSuccess \
    --advanced-filter data.operationName StringContains 'Microsoft.Compute/virtualMachines/write'
```

You can also choose Azure Service Bus instead of Storage Queue if you need a more advanced and performant event store that supports transactions, filtering, forwarding, dead-letter queues, and topics (here is the list of all advanced Service Bus features).

The next step is to deploy the function app as a resource and enable its managed identity (MSI) in the resource definition:

```json
"identity": { "type": "SystemAssigned" },
```

This step also deploys the storage account required for the project files and application logs.

After that, we need to read secrets from Key Vault, the recommended place to store and retrieve secrets such as the master database account, storage, and VM passwords; however, we skip this step in the example.

The last step is to compress (zip) the function source files and deploy the archive to the function app created earlier.
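The packaging step can be sketched in Python with the standard zipfile module. The important detail is that host.json and the function folders must sit at the root of the archive; local-only files such as local.settings.json should be left out. The exclusion list is an assumption you would adapt to your repository, and deployment itself is left to the CLI (shown as a comment).

```python
import tempfile
import zipfile
from pathlib import Path

# Local-only files and folders that should not be shipped (adjust to your repo).
EXCLUDE = {"local.settings.json", ".git", ".vscode"}


def package_function_app(source: Path, archive: Path) -> list:
    """Zip the function app folder so that host.json sits at the archive root."""
    packed = []
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(source.rglob("*")):
            relative = path.relative_to(source)
            if path.is_dir() or relative.parts[0] in EXCLUDE:
                continue
            if path.resolve() == archive.resolve():
                continue  # never zip the archive into itself
            zf.write(path, relative.as_posix())
            packed.append(relative.as_posix())
    return packed


with tempfile.TemporaryDirectory() as tmp:
    app = Path(tmp) / "app"
    (app / "ValidationFunction").mkdir(parents=True)
    (app / "host.json").write_text('{"version": "2.0"}')
    (app / "local.settings.json").write_text("{}")
    (app / "ValidationFunction" / "run.ps1").write_text("param($QueueItem)\n")
    print(package_function_app(app, Path(tmp) / "app.zip"))

# Then deploy, e.g.:
# az functionapp deployment source config-zip --resource-group <rg> --name <app> --src app.zip
```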

Here you can find a preconfigured pipeline that can be imported into your Azure DevOps project.

Improvements

- For the retry function I use a Timer function whose polling interval is set with a cron (NCRONTAB) expression: 0 */5 * * * *, i.e. every 5 minutes. It can be replaced with a Queue Trigger function that relies on the queue trigger's retry (maxDequeueCount) and polling settings. An example host.json configuration is below:

```json
{
  "version": "2.0",
  "extensions": {
    "queues": {
      "maxPollingInterval": "00:05:00",
      "visibilityTimeout": "00:01:00",
      "batchSize": 16,
      "maxDequeueCount": 3,
      "newBatchThreshold": 5
    }
  }
}
```

- Profile vs Authorization Module. Instead of using the authorization module, you can use profile.ps1 and its authentication section:

```powershell
....
if ($env:MSI_SECRET -and (Get-Module -ListAvailable Az.Accounts)) {
    Connect-AzAccount -Identity
}
....
```

However, this option may not work in a local environment unless you configure the MSI environment variables.

- Add Azure Functions Proxies to customize the URL format.
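To see what the queue-trigger alternative from the first improvement does with a failing message, here is a small Python simulation. When a queue-triggered function throws, the runtime makes the message visible again after the visibilityTimeout and retries it; after maxDequeueCount failed attempts it moves the message to a queue named <queue>-poison. The timeline ignores polling delays, so the timings are approximate, and the handler and queue name are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class QueueTriggerSettings:
    max_dequeue_count: int = 3       # host.json "maxDequeueCount"
    visibility_timeout_s: int = 60   # host.json "visibilityTimeout": "00:01:00"


def simulate_queue_trigger(handler: Callable[[dict, int], None], message: dict,
                           queue_name: str,
                           settings: Optional[QueueTriggerSettings] = None
                           ) -> Tuple[str, List[tuple]]:
    """Return the queue the message ends up in and a
    (seconds, dequeue_count, outcome) timeline; polling delays are ignored."""
    settings = settings or QueueTriggerSettings()
    timeline, elapsed = [], 0
    for dequeue_count in range(1, settings.max_dequeue_count + 1):
        try:
            handler(message, dequeue_count)
        except Exception as exc:  # a thrown error makes the runtime retry the message
            timeline.append((elapsed, dequeue_count, f"failed: {exc}"))
            elapsed += settings.visibility_timeout_s
            continue
        timeline.append((elapsed, dequeue_count, "succeeded"))
        return queue_name, timeline
    # After maxDequeueCount failed attempts the message goes to the poison queue.
    return f"{queue_name}-poison", timeline


def flaky_handler(message: dict, dequeue_count: int) -> None:
    if dequeue_count < 3:  # e.g. the database is not reachable yet
        raise RuntimeError("database not reachable yet")


print(simulate_queue_trigger(flaky_handler, {"vm": "vm-db-01"}, "vm-events-main"))
```

With these settings a message gets roughly two minutes of retries before landing in the poison queue, which replaces the separate timer-driven retry queue.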

Conclusion

In this article I described how to build an event-driven architecture to manage virtual machines and their related utilities and components.

The presented solution can be reused in the following scenarios:

- Key Vault and SSL certificate management (check certificate expiration times, log and notify, update certificates automatically)

- Custom logic to build cloud expense reports

- Cloud resource backup, availability checks, and logging (using Log Analytics or other tools)

- Resource clean-up management

- Container management solutions
