KubeVirt:

KubeVirt is an upstream community project that provides a virtualization API for Kubernetes, so that virtual machines can be managed alongside containers.

KubeVirt was developed to address the needs of development teams that have adopted or want to adopt Kubernetes, but also require support for virtual machine-based workloads that cannot be easily containerized. More specifically, the technology provides a unified development platform where developers can build, modify, and deploy applications residing in both application containers and virtual machines in a common, shared environment.

Teams with a reliance on existing virtual machine-based workloads are empowered to containerize applications. With virtualized workloads placed directly in development workflows, teams can decompose them into microservices over time, while still leveraging their remaining virtualized components as they exist.

Service Mesh:

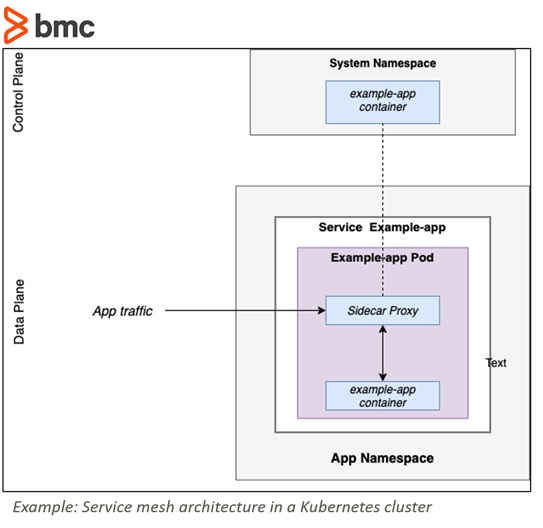

The Red Hat OpenShift Service Mesh is based on Istio. It provides behavioral insight and operational control over the service mesh, designed to provide a uniform way to connect, better secure, and monitor microservice applications.

As a service mesh grows in size and complexity, it can become harder to understand and manage. Red Hat OpenShift Service Mesh adds a transparent communication layer to existing distributed applications, without requiring any changes to the service code. You add Red Hat OpenShift Service Mesh support to services by deploying a special sidecar proxy throughout your environment. The proxy intercepts all network communication between microservices, and you configure and manage the service mesh through the control plane features.

Red Hat OpenShift Service Mesh provides a simpler way to create a network of deployed services that provides discovery, load balancing, service-to-service authentication, failure recovery, metrics, and monitoring. A service mesh also provides more complex operational functionality, including A/B testing, canary releases, rate limiting, access control, and end-to-end authentication.

Bookinfo application:

In this blog post we look at the Bookinfo application, which consists of four separate microservices running on Red Hat OpenShift.

This simple application displays information about a book, similar to a single catalog entry of an online book store. Displayed on the page is a description of the book, the book’s details (ISBN, number of pages, and so on), and a few book reviews.

The four microservices used are:

Productpage: The productpage microservice calls the details and reviews microservices to populate the page (Python based).

Details: The details microservice contains the book information. This microservice runs on KubeVirt (Ruby based).

Reviews: The reviews microservice contains the book reviews. It also calls the ratings microservice (Java based).

Ratings: The ratings microservice contains the book ranking information that accompanies a book review.

Notice that the details microservice is actually running in the KubeVirt pod.
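The call relationships listed above can be sketched as a small graph. This is only an illustrative model and not part of the Bookinfo code: the `CALLS` dictionary and the `downstream` helper are hypothetical names introduced here.

```python
# Hypothetical model of the Bookinfo call graph described in this post:
# productpage calls details and reviews, and reviews calls ratings.
CALLS = {
    "productpage": ["details", "reviews"],
    "details": [],
    "reviews": ["ratings"],
    "ratings": [],
}


def downstream(service, calls=CALLS):
    """Return every service reachable from `service`, in depth-first order."""
    seen = []
    stack = list(reversed(calls[service]))
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.append(current)
        # Push callees in reverse so they are visited in declaration order.
        stack.extend(reversed(calls[current]))
    return seen


print(downstream("productpage"))
```

Because details has no callees of its own, moving it into a virtual machine does not change the shape of this graph. Only the place where that one node runs is different.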

Requirements:

This blog post is based on the following component versions:

Red Hat OpenShift version 3.11

KubeVirt version 0.10

Service Mesh version 0.2

Deployment:

The following steps set up this environment:

On a freshly installed OpenShift 3.11, use this link to enable Service Mesh.

After Service Mesh is up and running, use this GitHub repository to deploy KubeVirt.

After KubeVirt is deployed, apply bookinfo.yaml and bookinfo-gateway.yaml from this GitHub repository.

Walkthrough of the setup:

We are going to use the bookinfo sample application from the Istio webpage.

The Bookinfo application will run with a small change: the details service will run on a virtual machine inside our Kubernetes cluster! Once the environment is deployed, it looks like this:

Pods Details

    [root@bastion ~]# oc get pods
    NAME                              READY   STATUS    RESTARTS   AGE
    productpage-v1-57f4d6b98-gdhcf    2/2     Running   0          7h
    ratings-v1-6ff8679f7b-5lxdh       2/2     Running   0          7h
    reviews-v1-5b66f78dc9-j98pj       2/2     Running   0          7h
    reviews-v2-5d6d58488c-7ww4t       2/2     Running   0          7h
    reviews-v3-5469468cff-wjsj7       2/2     Running   0          7h
    virt-launcher-vmi-details-bj9h6   3/3     Running   0          7h

Service details

    [root@bastion ~]# oc get svc
    NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    details       ClusterIP   172.30.18.170   <none>        9080/TCP   12h
    productpage   ClusterIP   172.30.93.172   <none>        9080/TCP   12h
    ratings       ClusterIP   172.30.91.26    <none>        9080/TCP   12h
    reviews       ClusterIP   172.30.37.239   <none>        9080/TCP   12h

Virtual services details

    [root@bastion ~]# oc get virtualservices
    NAME       AGE
    bookinfo   12h

Gateway details

    [root@bastion ~]# oc get gateway
    NAME               AGE
    bookinfo-gateway   12h

As you can see above, “virt-launcher-vmi-details” is a KubeVirt VM pod running the details microservice!

This pod consists of the following containers:

volumeregistryvolume -- this container provides the storage for the VM and the initial QEMU image

compute -- this container represents the virtualization layer running the VM instance

istio-proxy -- this is the sidecar Envoy proxy used by Istio to enforce policies

From the QEMU perspective, the virtual machine looks like this:

    [root@node2 ~]# ps -eaf | grep qemu
    root 17147 17131 0 15:41 ? 00:00:00 /usr/bin/virt-launcher --qemu-timeout 5m --name vmi-details --uid 7176552f-02db-11e9-b39a-06a5cb0d3fce --namespace test --kubevirt-share-dir /var/run/kubevirt --ephemeral-disk-dir /var/run/kubevirt-ephemeral-disks --readiness-file /tmp/healthy --grace-period-seconds 15 --hook-sidecars 0 --use-emulation
    root 17580 17147 0 15:42 ? 00:00:01 /usr/bin/virt-launcher --qemu-timeout 5m --name vmi-details --uid 7176552f-02db-11e9-b39a-06a5cb0d3fce --namespace test --kubevirt-share-dir /var/run/kubevirt --ephemeral-disk-dir /var/run/kubevirt-ephemeral-disks --readiness-file /tmp/healthy --grace-period-seconds 15 --hook-sidecars 0 --use-emulation --no-fork true
    107 18025 17147 30 15:42 ? 00:18:26 /usr/bin/qemu-system-x86_64 -name guest=test_vmi-details <snip>user,id=testSlirp,net=10.0.2.0/24,dnssearch=test.svc.cluster.local,dnssearch=svc.cluster.local,dnssearch=cluster.local,dnssearch=ec2.internal,dnssearch=2306.internal,hostfwd=tcp::9080-:9080 -device e1000,netdev=testSlirp,id=testSlirp -msg timestamp=on
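The `hostfwd=tcp::9080-:9080` option in the QEMU command line is what forwards port 9080 into the guest. As a rough illustration, the sketch below parses that option using QEMU's documented `hostfwd=proto:[hostaddr]:hostport-[guestaddr]:guestport` layout. The `parse_hostfwd` helper, the `HostFwd` tuple, and the shortened `QEMU_NETDEV` string are hypothetical names introduced here, not part of KubeVirt or QEMU.

```python
import re
from typing import NamedTuple


class HostFwd(NamedTuple):
    proto: str
    host_addr: str   # empty string means "any address"
    host_port: int
    guest_addr: str  # empty string means the guest's default address
    guest_port: int


# Shortened copy of the user-mode netdev argument from the ps output.
QEMU_NETDEV = (
    "user,id=testSlirp,net=10.0.2.0/24,"
    "dnssearch=test.svc.cluster.local,hostfwd=tcp::9080-:9080"
)

HOSTFWD_RE = re.compile(
    r"hostfwd=(?P<proto>tcp|udp):(?P<host_addr>[^:]*):(?P<host_port>\d+)"
    r"-(?P<guest_addr>[^:]*):(?P<guest_port>\d+)"
)


def parse_hostfwd(netdev):
    """Return every hostfwd rule found in a QEMU user-mode netdev string."""
    return [
        HostFwd(
            m["proto"],
            m["host_addr"],
            int(m["host_port"]),
            m["guest_addr"],
            int(m["guest_port"]),
        )
        for m in HOSTFWD_RE.finditer(netdev)
    ]


for rule in parse_hostfwd(QEMU_NETDEV):
    print(rule)
```

With both addresses left empty, TCP port 9080 on the Pod side maps to port 9080 inside the guest. This matches the `containerPort` that the compute container declares.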

To look under the hood, we can inspect the iptables entries that kube-proxy injects on the node hosting the Pod that runs the VM.

    [root@node2 ~]# iptables-save | grep -i details
    -A KUBE-SEP-GRSCMVBAWU6K5QZC -s 10.1.10.123/32 -m comment --comment "test/details:http" -j KUBE-MARK-MASQ
    -A KUBE-SEP-GRSCMVBAWU6K5QZC -p tcp -m comment --comment "test/details:http" -m tcp -j DNAT --to-destination 10.1.10.123:9080
    -A KUBE-SERVICES -d 172.30.46.76/32 -p tcp -m comment --comment "test/details:http cluster IP" -m tcp --dport 9080 -j KUBE-SVC-U5GFLZHENRTXEBQ7
    -A KUBE-SVC-U5GFLZHENRTXEBQ7 -m comment --comment "test/details:http" -j KUBE-SEP-GRSCMVBAWU6K5QZC

From the following output we can see that in our lab the KubeVirt Pod is running with IP 10.1.10.123.

    [root@bastion ~]# oc get pods virt-launcher-vmi-details-pb7fg -o wide
    NAME                              READY   STATUS    RESTARTS   AGE   IP            NODE                  NOMINATED NODE
    virt-launcher-vmi-details-pb7fg   3/3     Running   0          16m   10.1.10.123   node2.2306.internal   <none>

From the iptables output we can see how kube-proxy creates the masquerade and DNAT rules that redirect traffic addressed to the Kubernetes service details, which in our lab has the cluster IP 172.30.46.76, to the Pod at 10.1.10.123:9080. This is identical to how Kubernetes handles the routes for any other service defined in the cluster.
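To make the rule chain easier to follow, here is a minimal sketch that walks the four rules from this post, starting at the cluster IP and ending at the DNAT destination. It is a simplification under stated assumptions: the `parse_rule` and `resolve_cluster_ip` helpers are hypothetical, every option is assumed to take exactly one value, and only the first jump in each chain is followed, so load balancing across several endpoints is ignored.

```python
import shlex

# Rules copied from the iptables-save output shown in this post.
RULES = """\
-A KUBE-SEP-GRSCMVBAWU6K5QZC -s 10.1.10.123/32 -m comment --comment "test/details:http" -j KUBE-MARK-MASQ
-A KUBE-SEP-GRSCMVBAWU6K5QZC -p tcp -m comment --comment "test/details:http" -m tcp -j DNAT --to-destination 10.1.10.123:9080
-A KUBE-SERVICES -d 172.30.46.76/32 -p tcp -m comment --comment "test/details:http cluster IP" -m tcp --dport 9080 -j KUBE-SVC-U5GFLZHENRTXEBQ7
-A KUBE-SVC-U5GFLZHENRTXEBQ7 -m comment --comment "test/details:http" -j KUBE-SEP-GRSCMVBAWU6K5QZC
"""


def parse_rule(line):
    """Turn one rule into a dict of option -> value (first value wins)."""
    tokens = shlex.split(line)
    rule = {}
    for option, value in zip(tokens[::2], tokens[1::2]):
        rule.setdefault(option, value)
    return rule


def resolve_cluster_ip(cluster_ip, rules_text=RULES):
    """Follow the jumps from KUBE-SERVICES until a DNAT target is found."""
    chains = {}
    for line in rules_text.splitlines():
        if line.startswith("-A "):
            rule = parse_rule(line)
            chains.setdefault(rule["-A"], []).append(rule)

    # Entry point: the KUBE-SERVICES rule that matches the cluster IP.
    chain = next(
        (r.get("-j") for r in chains.get("KUBE-SERVICES", [])
         if r.get("-d") == f"{cluster_ip}/32"),
        None,
    )
    # Bound the walk by the number of chains so a cycle cannot loop forever.
    for _ in range(len(chains)):
        if chain is None:
            return None
        next_chain = None
        for rule in chains.get(chain, []):
            if rule.get("-j") == "DNAT":
                return rule["--to-destination"]
            if next_chain is None and rule.get("-j") in chains:
                next_chain = rule.get("-j")
        chain = next_chain
    return None


print(resolve_cluster_ip("172.30.46.76"))
```

The walk goes from KUBE-SERVICES to the KUBE-SVC chain, then to the KUBE-SEP chain, where the DNAT rule names the Pod IP and port.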


Looking at the details service definition we can see all the standard Kubernetes constructs are still in use. In this particular service definition we are exposing TCP 9080 over the Cluster IP.

    [root@bastion ~]# oc get svc details -o yaml
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"details"},"name":"details","namespace":"test"},"spec":{"ports":[{"name":"http","port":9080}],"selector":{"app":"details"}}}
      creationTimestamp: 2018-12-18T15:41:53Z
      labels:
        app: details
      name: details
      namespace: test
      resourceVersion: "1884449"
      selfLink: /api/v1/namespaces/test/services/details
      uid: 717339bc-02db-11e9-b39a-06a5cb0d3fce
    spec:
      clusterIP: 172.30.46.76
      ports:
      - name: http
        port: 9080
        protocol: TCP
        targetPort: 9080
      selector:
        app: details
      sessionAffinity: None
      type: ClusterIP
    status:
      loadBalancer: {}
    [root@bastion ~]#
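The `selector` in the service definition is what ties the service to the Pod that runs the VM. A minimal sketch of equality-based selector matching follows. The `selects` helper is hypothetical, and the Pod labels are an assumption made for illustration, because the labels of the virt-launcher Pod are not shown in this post.

```python
def selects(selector, pod_labels):
    """Return True if the Pod carries every key/value pair in the selector.

    An empty selector is treated as matching nothing, since a Service
    without a selector gets no automatically managed endpoints.
    """
    if not selector:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Selector taken from the details service definition in this post.
details_selector = {"app": "details"}

# Assumed labels, for illustration only.
virt_launcher_labels = {"app": "details", "version": "v1"}
reviews_labels = {"app": "reviews", "version": "v1"}

print(selects(details_selector, virt_launcher_labels))
print(selects(details_selector, reviews_labels))
```

Under that assumption, the service treats the virt-launcher Pod like any other backend. Nothing in the service definition needs to know that the workload is a virtual machine.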

Taking a closer look at the actual KubeVirt Pod definition, we get a lot more detail about what we saw before in the GUI:

We have a Pod named virt-launcher-vmi-details-pb7fg.

This Pod contains the following containers: volumeregistryvolume (the volumes and QEMU image), compute (the instance of the QEMU image with the volumes), istio-proxy (the Istio sidecar), and istio-init. The last one is used only during the instantiation of istio-proxy, to set up the iptables rules that redirect the Pod's traffic through the proxy.

    [root@bastion ~]# oc get pods virt-launcher-vmi-details-pb7fg -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
    <snip>
      name: virt-launcher-vmi-details-pb7fg
    <snip>
        kind: VirtualMachineInstance
    <snip>
      containers:
    <snip>
        image: kubevirt/fedora-cloud-registry-disk-demo:latest
        imagePullPolicy: IfNotPresent
        name: volumeregistryvolume
    <snip>
        volumeMounts:
        - mountPath: /var/run/kubevirt-ephemeral-disks
          name: ephemeral-disks
    <snip>
        image: docker.io/kubevirt/virt-launcher:v0.10.0
        imagePullPolicy: IfNotPresent
        name: compute
        ports:
        - containerPort: 9080
          name: http
          protocol: TCP
    <snip>
      - args:
        - proxy
        - sidecar
        - --configPath
        - /etc/istio/proxy
        - --binaryPath
        - /usr/local/bin/envoy
        - --serviceCluster
        - details
        - --drainDuration
        - 45s
        - --parentShutdownDuration
        - 1m0s
        - --discoveryAddress
        - istio-pilot.istio-system:15005
        - --discoveryRefreshDelay
        - 1s
        - --zipkinAddress
        - zipkin.istio-system:9411
        - --connectTimeout
        - 10s
        - --statsdUdpAddress
        - istio-statsd-prom-bridge.istio-system:9125
        - --proxyAdminPort
        - "15000"
        - --controlPlaneAuthPolicy
        - MUTUAL_TLS
    <snip>
        image: openshift-istio-tech-preview/proxyv2:0.2.0
        imagePullPolicy: IfNotPresent
        name: istio-proxy
    <snip>
        image: openshift-istio-tech-preview/proxy-init:0.2.0
        imagePullPolicy: IfNotPresent
        name: istio-init
        resources: {}
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
          privileged: true
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
    <snip>
      hostIP: 192.199.0.24
    <snip>
      podIP: 10.1.10.123
      qosClass: Burstable
    [root@bastion ~]#

Now let's take a closer look at the virtual machine part of the bookinfo.yaml file. The interfaces section creates an interface of type slirp, connects it to the pod network by matching the pod network name, and adds our application port.

Note that in a real production scenario we do not recommend the slirp interface because it is inherently slow. Instead, we prefer the masquerade interface (which places the VM behind NAT inside the Pod), introduced with this PR.

From a networking perspective, the main problem with using Istio together with KubeVirt is that, in order to communicate with the QEMU process, we create new network interfaces (a Linux bridge and a tap device) in the Pod network namespace. These interfaces are affected by the iptables rules (the PREROUTING chain rules that send inbound traffic to the Envoy process). This problem can be addressed by this PR.

    interfaces:
    - name: testSlirp
      slirp: {}
      ports:
      - name: http
        port: 9080

The following cloud-init configuration downloads, installs, and runs the details application:

    - cloudInitNoCloud:
        userData: |-
          #!/bin/bash
          echo "fedora" |passwd fedora --stdin
          yum install git ruby -y
          git clone https://github.com/istio/istio.git
          cd istio/samples/bookinfo/src/details/
          ruby details.rb 9080 &

Our environment is configured to run the services in an Istio-enabled environment, with Envoy sidecars injected alongside each service. The following policy in the istio-sidecar-injector configmap ensures that sidecar injection happens by default:

    [root@bastion ~]# oc -n istio-system edit configmap istio-sidecar-injector
    # Please edit the object below. Lines beginning with a '#' will be ignored,
    # and an empty file will abort the edit. If an error occurs while saving this file will be
    # reopened with the relevant failures.
    #
    apiVersion: v1
    data:
      config: "policy: enabled\ntemplate: |-\n initContainers:\n - name: istio-init\n

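The lines below, when added to the istio-sidecar-injector configmap, restrict the sidecar's interception of outbound traffic to the listed cluster IP ranges. Traffic to external destinations therefore bypasses Envoy, which allows cloud-init to install the required RPMs and clone the external repository, https://github.com/istio/istio.git.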

\"traffic.sidecar.istio.io/includeOutboundIPRanges\" ]]\"\n [[ else -]]\n

\ - \"172.30.0.0/16,10.1.0.0/16\"\n
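The effect of the two ranges can be illustrated with a short check of which destinations fall inside them. This is only a sketch of the CIDR matching, not of Istio's actual iptables logic. The `is_intercepted` helper is hypothetical, and 203.0.113.10 is a documentation address standing in for any external host.

```python
import ipaddress

# Ranges taken from the configmap fragment shown in this post.
INCLUDE_OUTBOUND_IP_RANGES = "172.30.0.0/16,10.1.0.0/16"


def is_intercepted(dest_ip, ranges=INCLUDE_OUTBOUND_IP_RANGES):
    """Return True if dest_ip falls inside one of the comma-separated CIDRs."""
    address = ipaddress.ip_address(dest_ip)
    return any(
        address in ipaddress.ip_network(cidr.strip())
        for cidr in ranges.split(",")
    )


for destination in ("172.30.46.76", "10.1.10.123", "203.0.113.10"):
    print(destination, is_intercepted(destination))
```

The service cluster IP and the Pod IP from our lab fall inside the ranges, so calls between the Bookinfo services still pass through the sidecar. The external address does not, which is why the package installation and the git clone in the cloud-init script can reach the internet directly.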

-------

All of the microservices are packaged with an Envoy sidecar that intercepts incoming and outgoing calls for the service. This provides the hooks needed to externally control routing, telemetry collection, and policy enforcement for the application as a whole via the Istio control plane.

Finally, the Bookinfo sample application will run, as shown below.

Distributed tracing:

Since Service Mesh is installed in our setup, let's see how Jaeger makes it possible to monitor and troubleshoot microservices.

Jaeger:

Jaeger is an open source distributed tracing system. You use Jaeger for monitoring and troubleshooting microservices-based distributed systems. Using Jaeger you can perform a trace, which follows the path of a request through various microservices that make up an application. Jaeger is installed by default as part of the Service Mesh.

Below is an example of how the details microservice looks in Jaeger in terms of traceability and monitoring.

With OpenShift, your cloud, your way

Hybrid use cases that run virtual machines in containers can bring a lot of flexibility and advantages over the traditional approach of using a container platform in conjunction with a separate virtualization platform. Using a service mesh can reduce the complexity of the joint solution. The main benefit of using microservices is that they allow us to decompose an application into smaller services, which improves modularity.

By standardizing on OpenShift, you also lay a foundation for the entire stack, including service management and security, as well as simplified application procurement and deployment, across clouds and on-premises. Stay tuned in the coming months for more about KubeVirt use cases along with service mesh, microservices, and OpenShift Container Platform.