What’s the buzz all about? Many in the industry see it as a game changer. Today I will share my experience with Copilot for Azure (not to be confused with other Copilots, such as GitHub Copilot, Windows Copilot, Dynamics 365 Copilot, Microsoft 365 Copilot, etc.).

At the time of writing, Microsoft Copilot for Azure is in public preview. This morning, I received a much-awaited email stating: ‘Welcome to the limited public preview of Microsoft Copilot for Azure!’ Congratulations! It seemed I was the chosen one. No pun intended, I was. After all, it promises a paradigm shift in simplifying cloud operations, a promise that is close to my heart: pretty much the new way of working.

It also validates my own prediction about Horizon5 (H5) in my article Next Quarter Century of GenAI. H5 is about the evolution from Generative AI to Executable AI.

I will divide this article into two sections: Theory and Practical hands-on.

Section 1: Theory

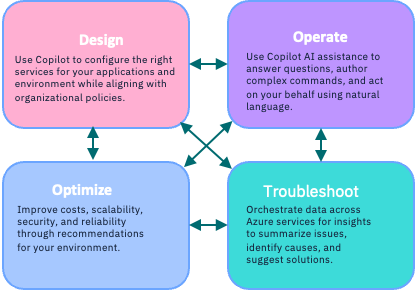

On November 15, 2023, Microsoft announced Microsoft Copilot for Azure, an AI companion that helps you design, operate, optimize, and troubleshoot your cloud infrastructure and services. Combining the power of cutting-edge large language models (LLMs) with the Azure Resource Model, Copilot for Azure enables rich understanding and management of everything that’s happening in Azure, from the cloud to the edge.

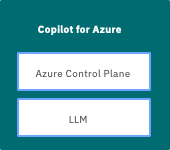

Azure users can gain new insights into their workloads, unlock untapped Azure functionality and orchestrate tasks across both cloud and edge. It leverages Large Language Models (LLMs), the Azure control plane and insights about a user’s Azure and Arc-enabled assets. All of this is carried out within the framework of Azure’s steadfast commitment to safeguarding the customer’s data security and privacy.

Let me provide a summary.

- Leverages large language models (LLMs) and the Azure control plane

- Answers questions about your Azure managed environment

- Generates queries for your Azure managed environment

- Performs tasks and safely acts on your behalf for your managed environment

- Makes high-quality recommendations and takes actions within your organization’s policy and privacy requirements

So, with this, you can understand your Azure environment, work smarter with your Azure services, and write and optimize code for your applications on Azure.

Section 2: Practical hands-on

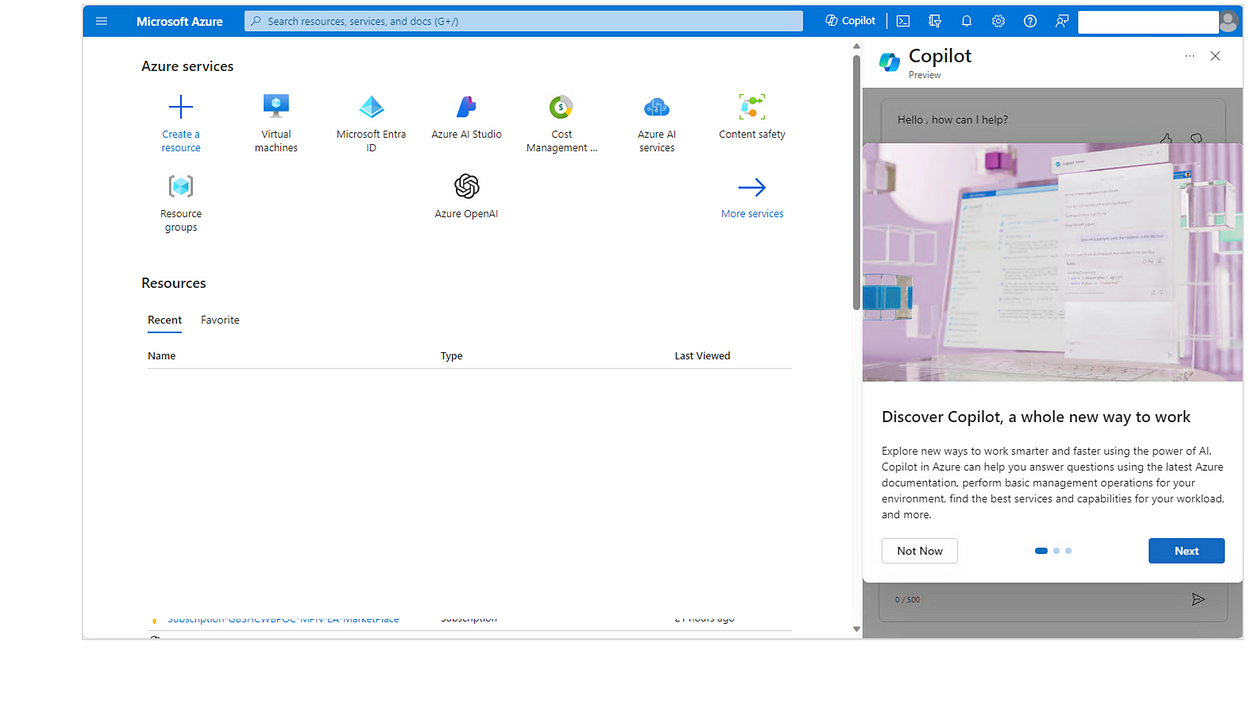

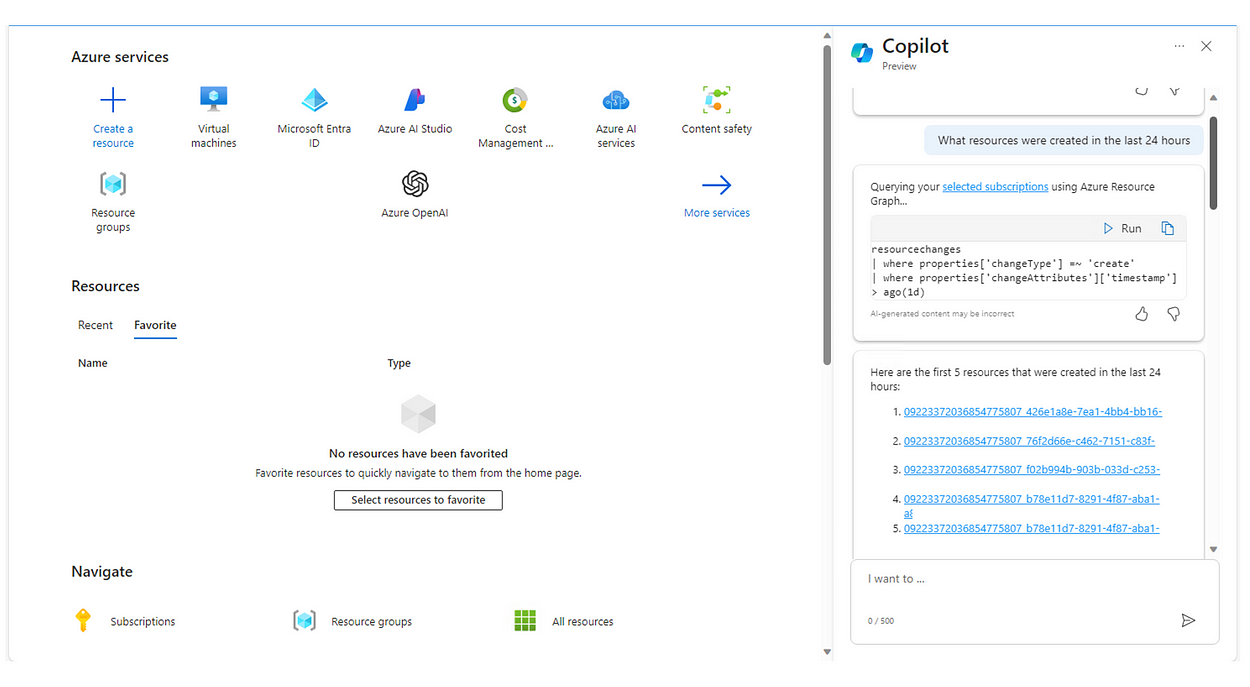

I logged in to the Azure portal. The Copilot icon appeared in the top bar for me to happily click on (and I did just that). It is provided as a sidebar in the portal itself, so as you navigate the portal services, Copilot stays with you and picks up context from your browsing as well.

To start with, I asked how many resources are running (for me). It replied accurately right there: 184 resources.
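If you want to cross-check a count like this yourself, here is a minimal sketch in Python. It assumes you have exported your resources as a JSON array, for example with `az resource list --output json`, where each entry carries a `type` field. The sample data and the function name `summarize_resources` are made up for illustration; this is not what Copilot runs internally.

```python
import json
from collections import Counter


def summarize_resources(resources):
    """Return (total, per-type counts) for a list of resource dicts."""
    # Resource types are case-insensitive in Azure, so normalise to lower case.
    by_type = Counter(r.get("type", "unknown").lower() for r in resources)
    return sum(by_type.values()), by_type


# Made-up sample in the shape of an exported resource list (assumption).
sample = json.loads("""
[
  {"name": "vm-web-01", "type": "Microsoft.Compute/virtualMachines"},
  {"name": "vm-web-02", "type": "Microsoft.Compute/virtualMachines"},
  {"name": "stlogs001", "type": "Microsoft.Storage/storageAccounts"}
]
""")

total, by_type = summarize_resources(sample)
print(f"Total resources: {total}")
for resource_type, count in by_type.most_common():
    print(f"  {resource_type}: {count}")
```

With a real export, the total should line up with the number Copilot reports, as long as both look at the same subscriptions.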

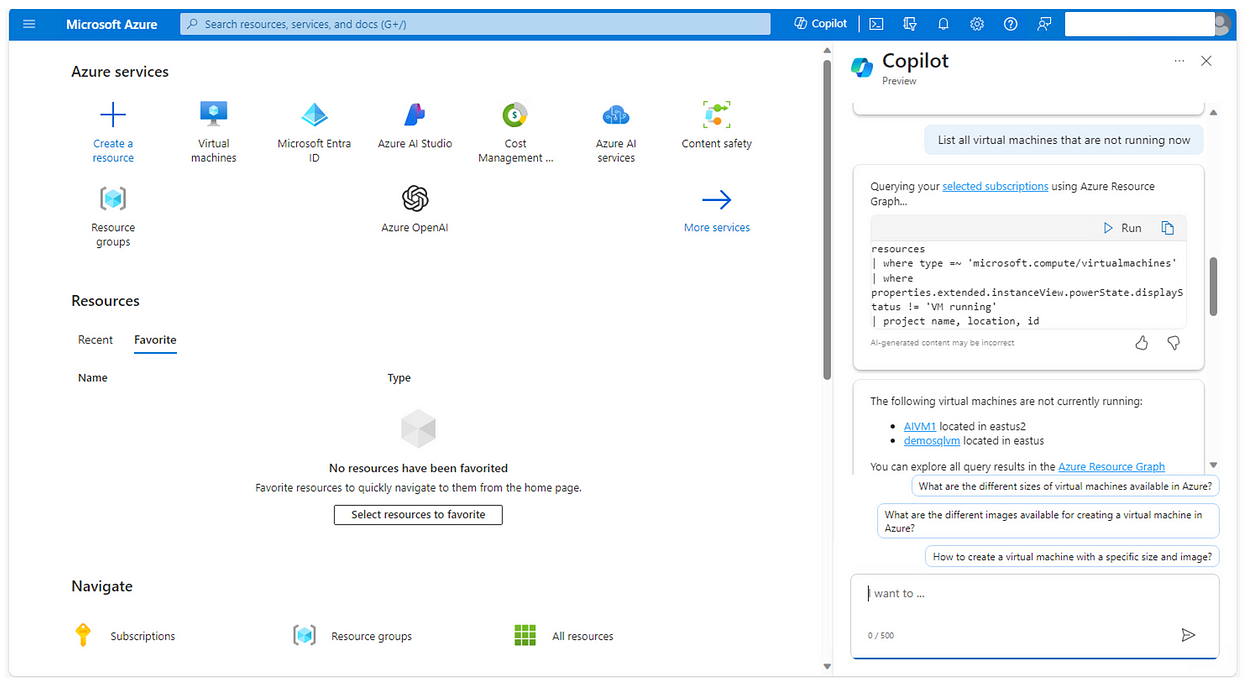

Further, I asked Copilot to list all VMs that are not running now, as shown in Fig. 3. It understood the context of the resources in my subscription (tenant ID).
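For readers who prefer a query over a chat prompt, the same question can be approximated with an Azure Resource Graph query. The sketch below only builds the query text in Python. The power-state property path follows the pattern used in Resource Graph sample queries, and I have not confirmed that it matches the query Copilot generated for me, so treat it as an assumption. The function name `stopped_vms_query` is hypothetical.

```python
VM_TYPE = "microsoft.compute/virtualmachines"


def stopped_vms_query(limit=100):
    """Build a Resource Graph (KQL) query listing VMs that are not running."""
    if limit <= 0:
        raise ValueError("limit must be a positive integer")
    return "\n".join([
        "Resources",
        f"| where type =~ '{VM_TYPE}'",
        # Assumed property path for the VM power state (see lead-in).
        "| extend powerState = tostring(properties.extended.instanceView.powerState.code)",
        "| where powerState != 'PowerState/running'",
        "| project name, resourceGroup, location, powerState",
        f"| limit {limit}",
    ])


print(stopped_vms_query(limit=50))
```

The printed text can be pasted into Resource Graph Explorer in the portal, or passed to `az graph query -q "<query>"` if the resource-graph CLI extension is installed.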

Next, I asked which resources were created in the last 24 hours, and it listed them as shown in Fig. 4.
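The logic behind a "created in the last 24 hours" question is a simple time-window filter. Here is a small sketch, assuming each resource record has a `name` and an ISO 8601 `createdTime` with a UTC offset. Both field names and the sample records are assumptions for illustration, and your export may name them differently.

```python
from datetime import datetime, timedelta, timezone


def created_within(resources, hours=24, now=None):
    """Return names of resources created within the last `hours` hours."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(hours=hours)
    recent = []
    for resource in resources:
        # Timestamps must carry an offset so the comparison is timezone-aware.
        created = datetime.fromisoformat(resource["createdTime"])
        if cutoff <= created <= now:
            recent.append(resource["name"])
    return recent


# Made-up records and a fixed "now" so the example is reproducible.
fixed_now = datetime(2023, 11, 20, 12, 0, tzinfo=timezone.utc)
sample = [
    {"name": "vm-new", "createdTime": "2023-11-20T03:00:00+00:00"},
    {"name": "st-yesterday", "createdTime": "2023-11-19T11:00:00+00:00"},
    {"name": "st-old", "createdTime": "2023-11-10T08:30:00+00:00"},
]

print(created_within(sample, hours=24, now=fixed_now))
```

Passing `now` explicitly keeps the example deterministic; in day-to-day use you would leave it out and let the function use the current UTC time.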

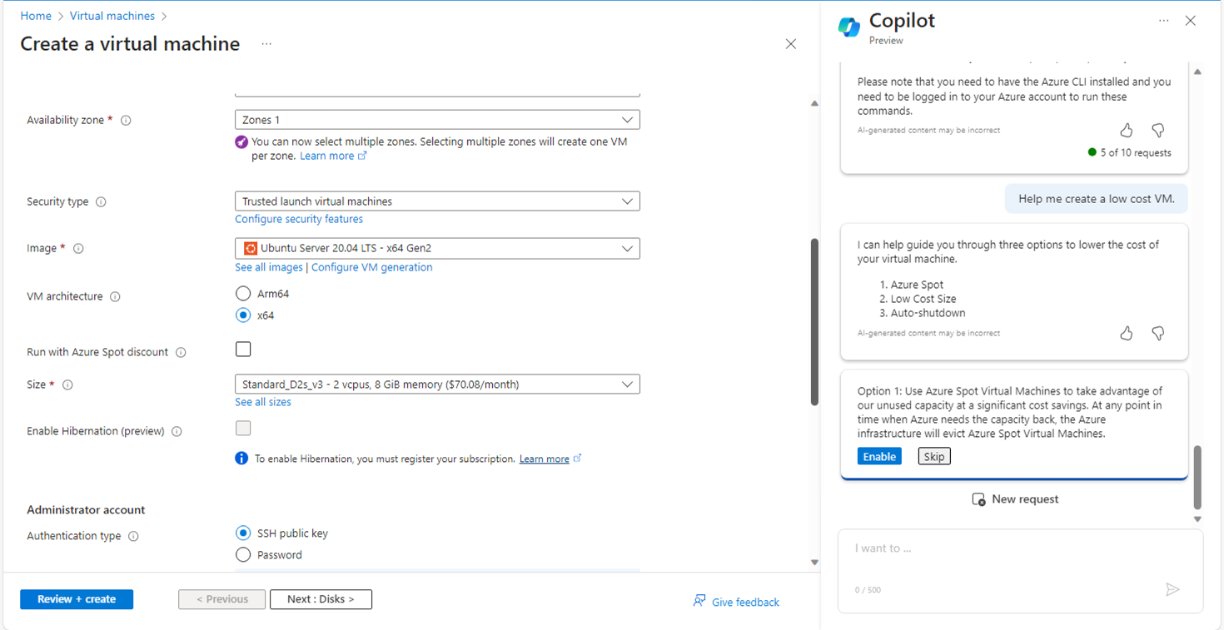

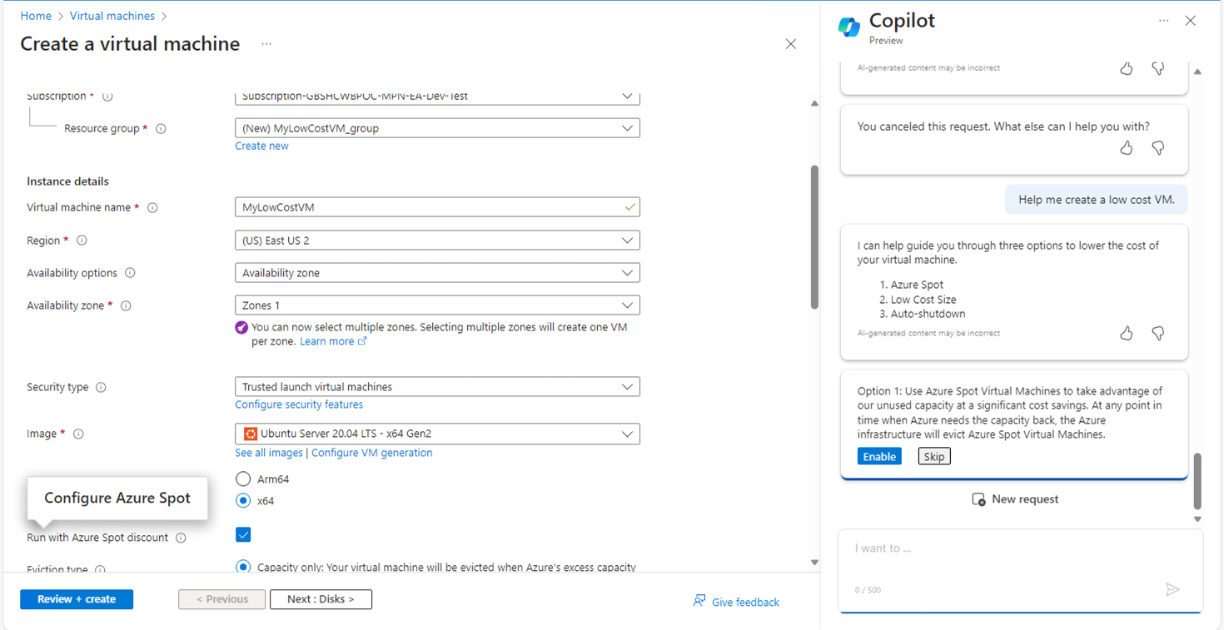

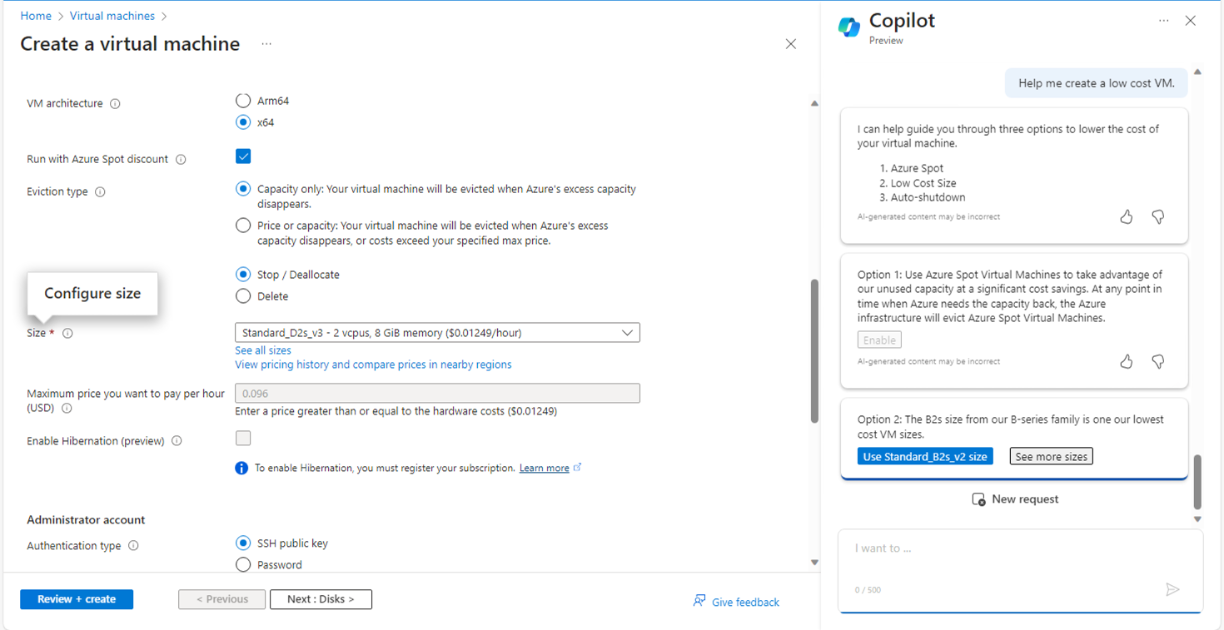

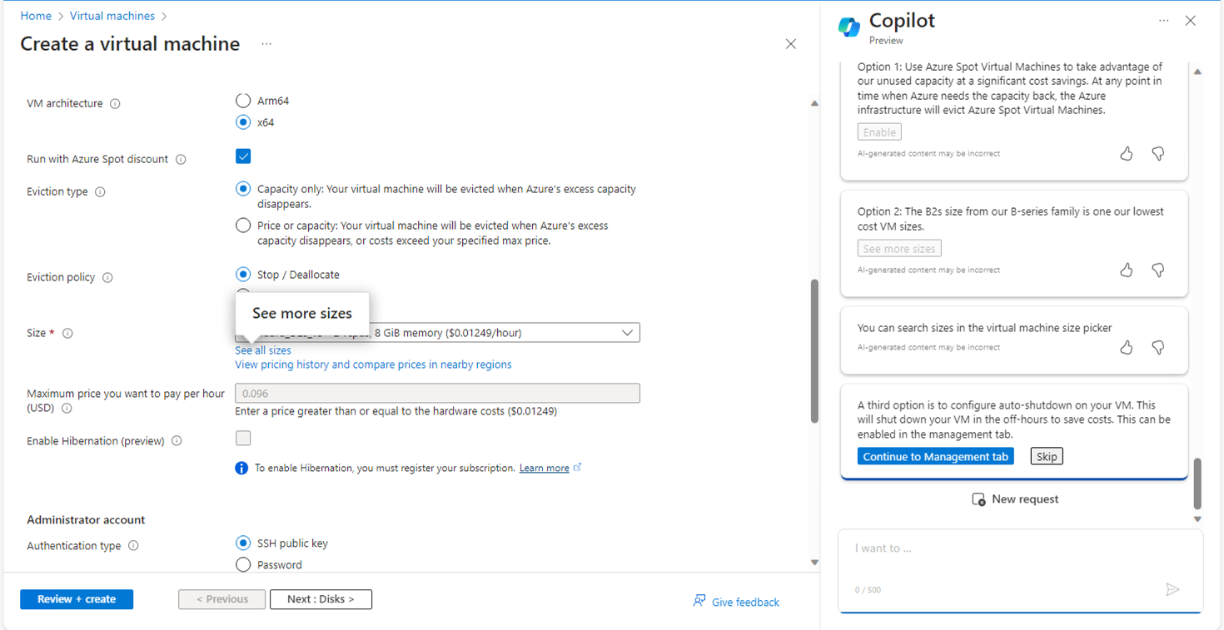

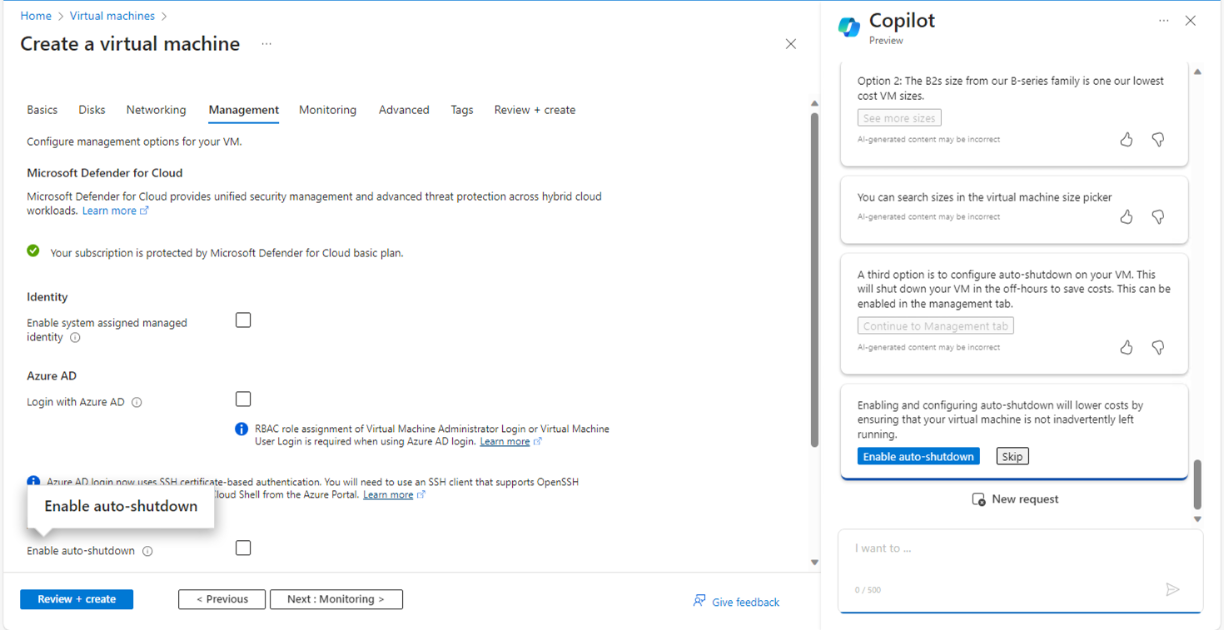

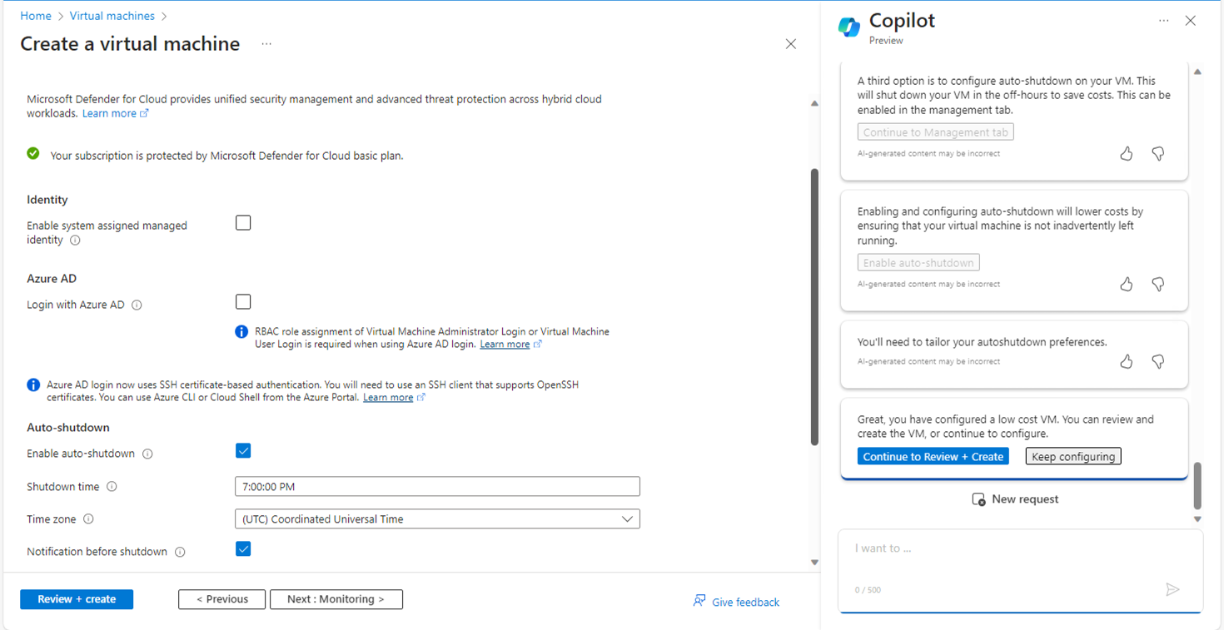

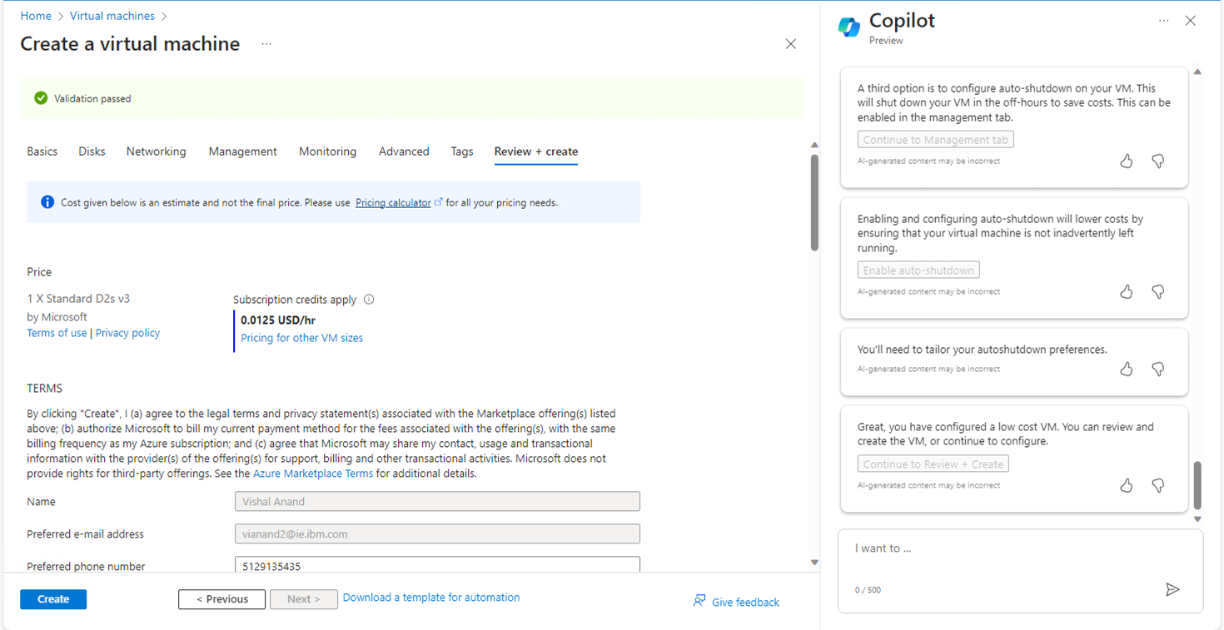

The next steps were even more interesting. It was a real augmented experience. Copilot (digital worker) and I (human worker) worked together. I asked it to help me create a low-cost VM, and it walked through the required steps one by one for the given context, giving me the option to enable or skip features along the way. From Fig. 5 to Fig. 11, it executed all the required steps, let me check the right options, and so on.

And here, we came to the final step of creating the VM, as shown in Fig. 11.

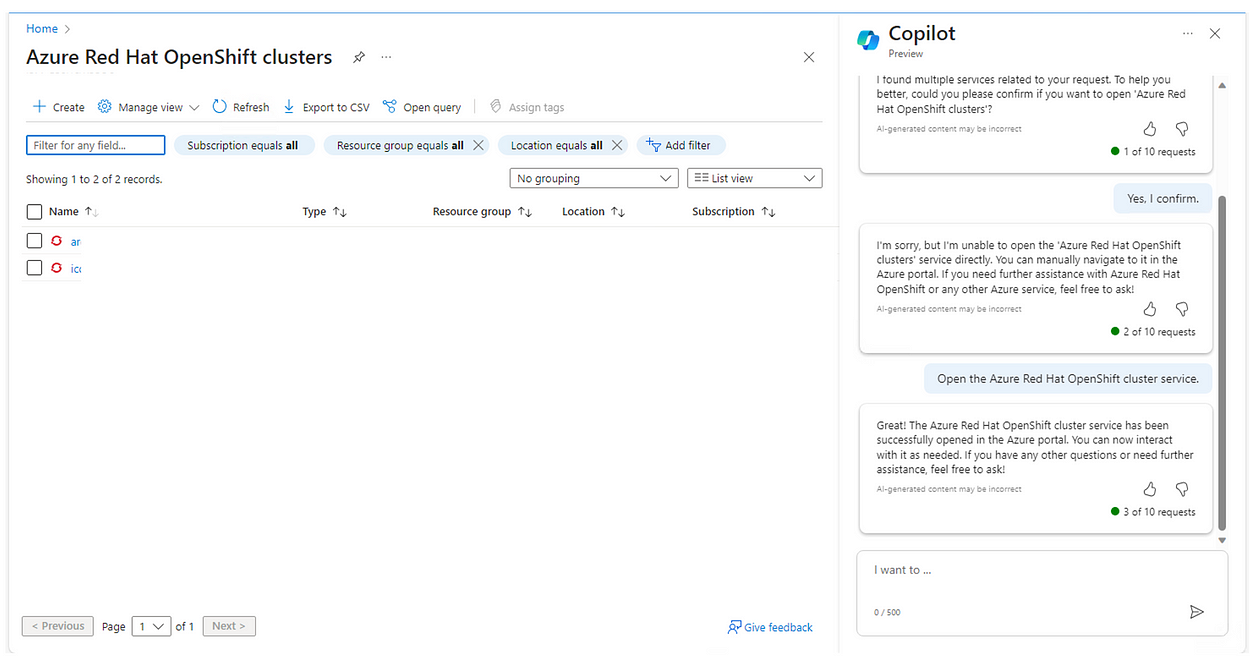

In the next step, I wanted Copilot to open the ARO (Azure Red Hat OpenShift) cluster service section. It opened it on my second attempt, as shown in Fig. 12.

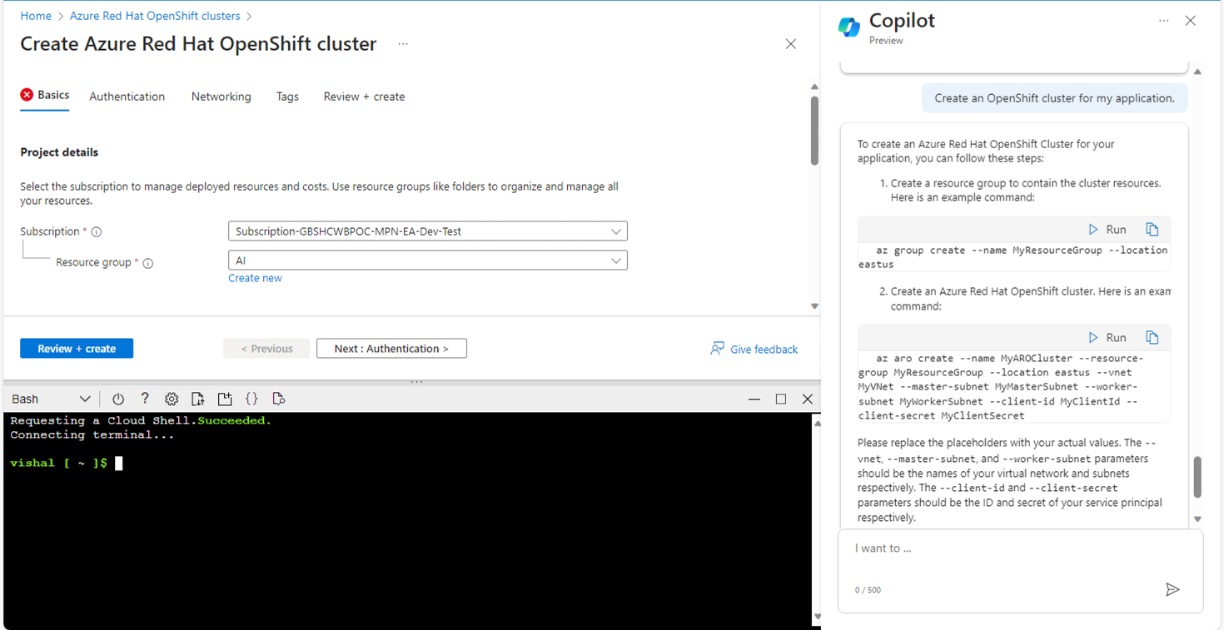

Further, I asked Copilot to create a cluster. Here it gave me the required commands and steps with a Run option. When I clicked on Run, it opened the command shell and expected me to run the commands, as shown in Fig. 13.

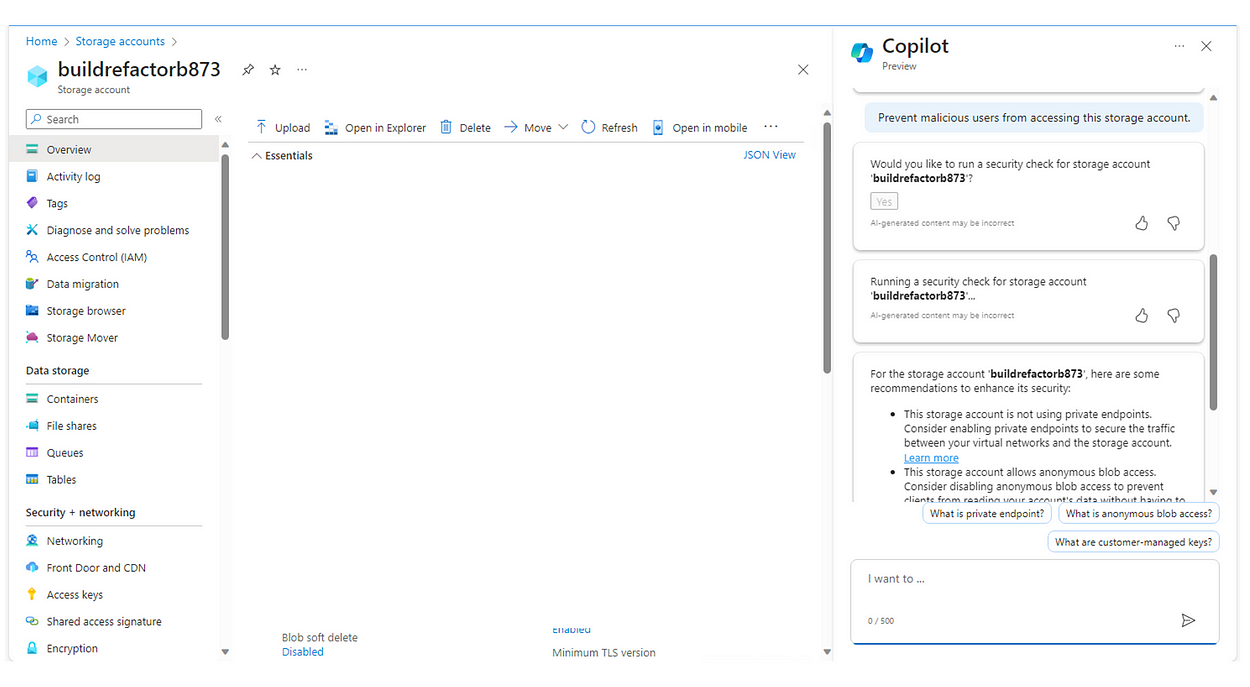

Then, I wanted to check whether there were any security weaknesses related to a storage account (in a test environment, of course).

It asked me if I would like Copilot to run security checks. I clicked on Yes, and it ran the security checks for that particular storage account and listed the weaknesses straight away, as shown in Fig. 14.

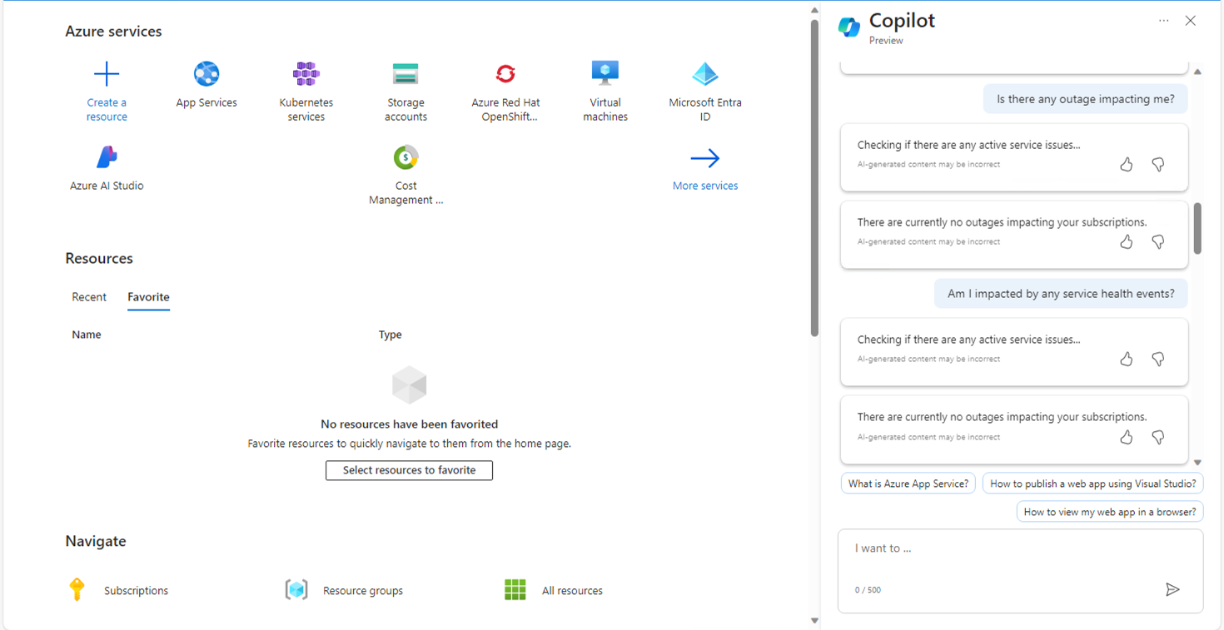

Here, I wanted to find out the Service Health of all resources that belong to my tenant. As you can see below in Fig. 15, I entered specific prompts related to outages, impact, health events, etc. It reported the correct status for those.

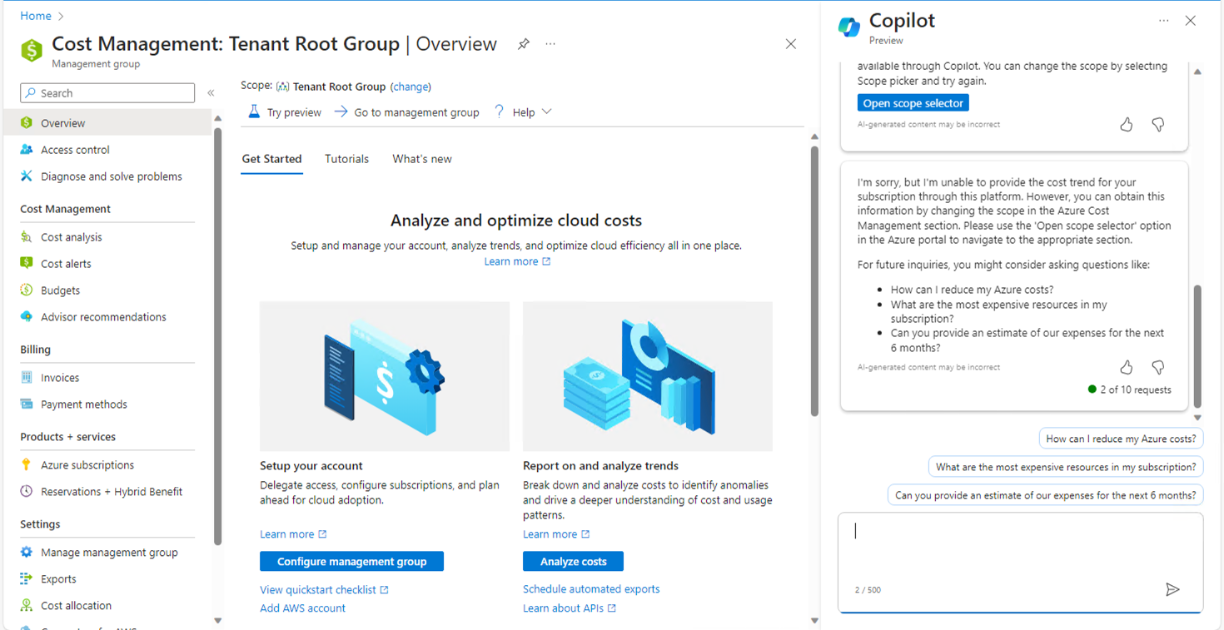

In Fig. 16, I learned that it did not yet provide any cost insights, forecasts, or trends, whereas it should have, as per the theory. I will retry. I am also sure many more features will be added over time. I would like to ask about any sudden cost spike for any resource during the week (will do tomorrow).
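Until Copilot answers the cost-spike question for me, here is a rough idea of what such a check could look like on your own numbers. It is a sketch under my own assumptions: the daily costs are made-up values for one resource, and "spike" is defined here as a day that costs more than twice the median of the preceding days. It says nothing about how Copilot or Azure Cost Management detect anomalies.

```python
from statistics import median


def find_cost_spikes(daily_costs, factor=2.0, min_history=3):
    """Return indexes of days whose cost exceeds factor x the prior median."""
    spikes = []
    for day in range(min_history, len(daily_costs)):
        # Median of all earlier days is less sensitive to an earlier spike
        # than the mean would be.
        baseline = median(daily_costs[:day])
        if baseline > 0 and daily_costs[day] > factor * baseline:
            spikes.append(day)
    return spikes


# Made-up daily costs for one resource over a week (day 0 to day 6).
week = [10.0, 11.0, 9.5, 10.5, 31.0, 10.0, 10.2]
print(find_cost_spikes(week))
```

For this sample week, only day 4 stands out. The `factor` and `min_history` values are arbitrary starting points that you would tune for your own spending pattern.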

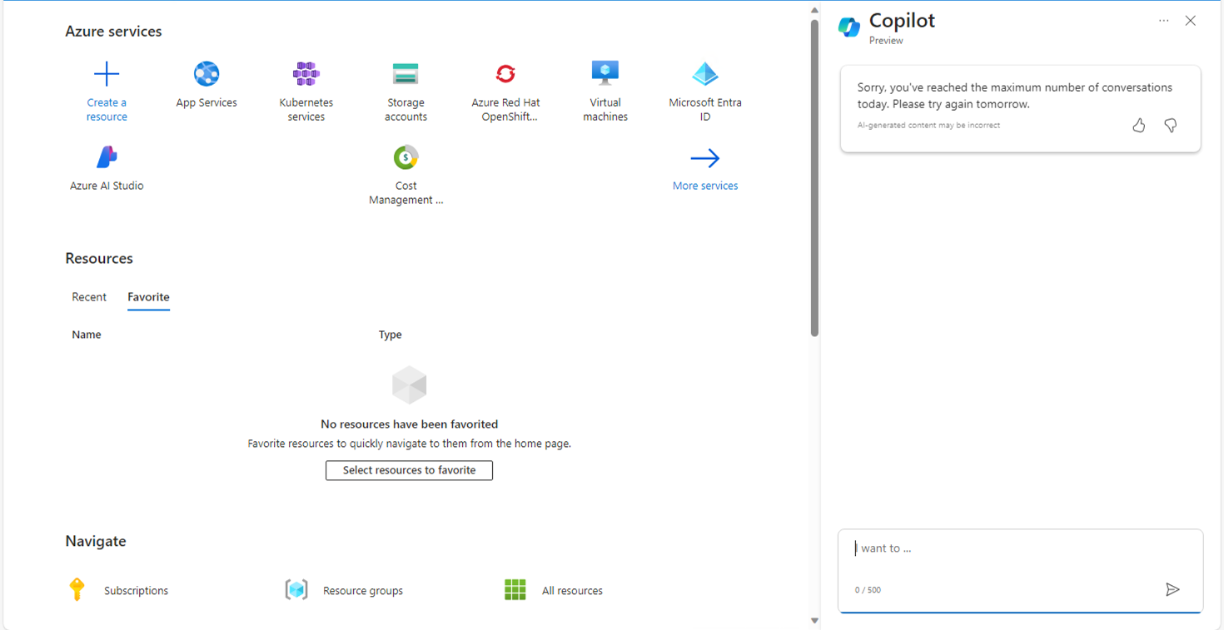

Finally, it seems I reached the daily limit for my conversations with Copilot, as shown below.

In fact, the email I received did state ‘Microsoft Copilot for Azure is experiencing an overwhelming demand from Microsoft customers. As a result, we have implemented conversation and turn limits to ensure that we maintain sufficient capacity to all customers enabled with this feature.’

So, I will talk to my Copilot for My Azure Resources tomorrow evening.

Conclusion:

- Overall, it demonstrates the promise of AI-augmented cloud operations.

- Even in public preview, it already lets you do a lot in terms of insights, service health, deployments, recommendations, steps, commands, etc.

- It scopes its responses to the admin’s or user’s subscription or tenant ID (rightly so).

- The era has arrived in which human workers and digital workers can collaborate in real time to add value to cloud operations.

- It is currently not recommended for production workloads (understandably, since it is in preview). So, we will have to wait (can we?) for its General Availability.

Disclaimer: Personal views, personal hands-on, personal understanding.

Please provide feedback and claps (if you like it). Knowledge shared is knowledge earned, so please share the article with like-minded readers.

Thank you as always,