Azure Pipelines, part of the Azure DevOps suite, is our Continuous Integration and Continuous Delivery (CI and CD) platform, used every day by large enterprises, individual developers, and open source projects. Today, we’re thrilled to announce new features for Azure Pipelines, including some much-requested ones:

- Multi-stage YAML pipelines (for CI and CD)

- Environments and deployment strategies

- Kubernetes support

Multi-stage YAML pipelines

One of our biggest customer requests since launching YAML support for Build pipelines (CI) has been to support it for Release pipelines (CD) as well. To accomplish this, we now offer a unified YAML experience, so you can configure each of your pipelines to do CI, CD, or CI and CD together. Defining your pipelines using YAML documents allows you to check the configuration of your CI/CD into source control together with your application’s code, for easy management, versioning, and control.
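To make the "CI only" case concrete, here is a minimal sketch of a pipeline file that builds on every push and does nothing else. The branch name and the echo command are placeholders we made up for illustration; swap in your own branch and build steps.

```yaml
# azure-pipelines.yml: a CI-only pipeline with no stages and no deployment.
# The branch name and the script below are illustrative assumptions.
trigger:
- master

pool:
  vmImage: 'Ubuntu-16.04'

steps:
# placeholder for your real build and test commands
- script: echo build and test the app
```

Because the file lives in the repository next to the code, a change to the pipeline goes through the same commits and reviews as any other change.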

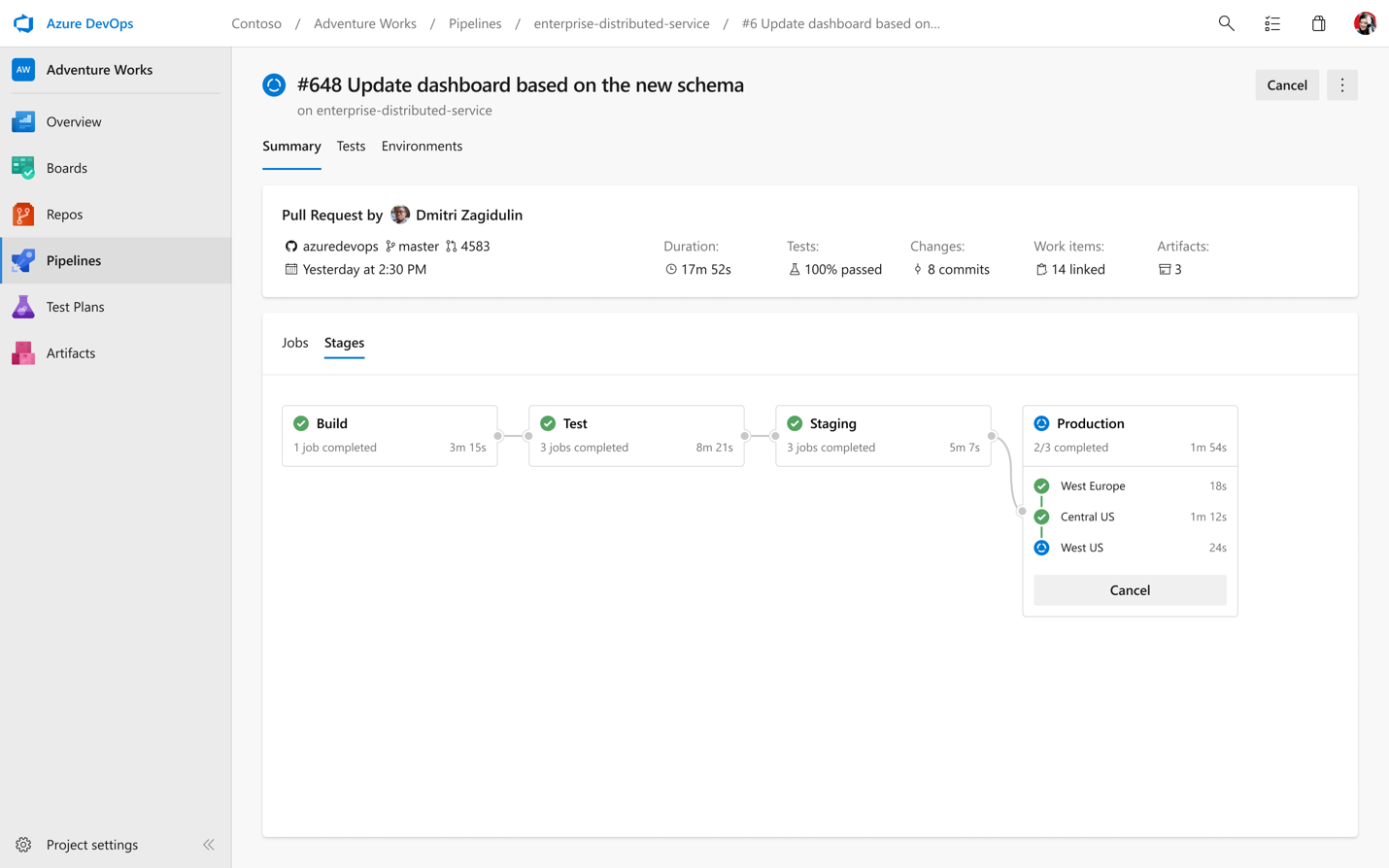

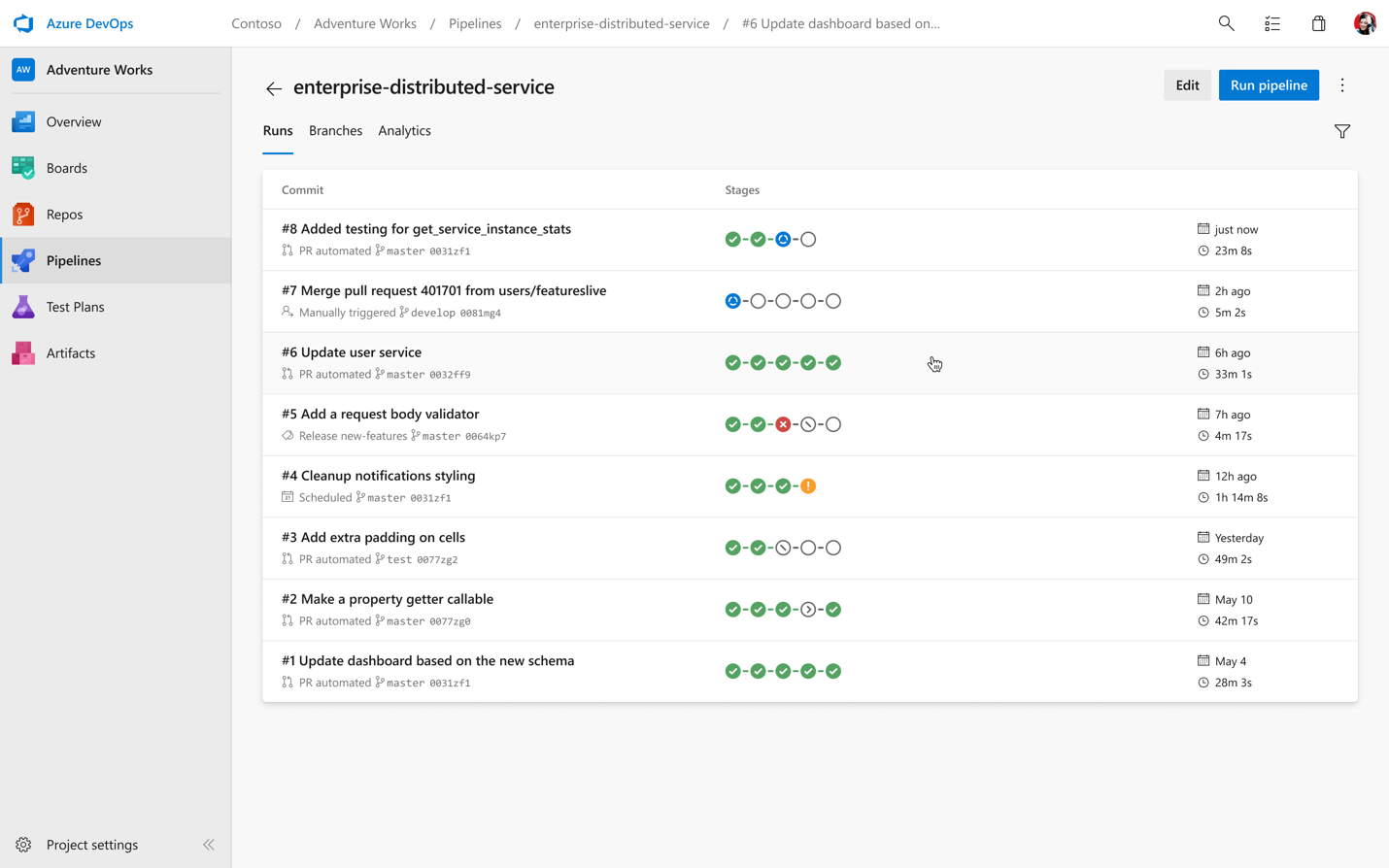

With our new YAML support, we’re also bringing a new UI to help visualize all of your multi-stage pipelines across the product, whether you’re in the run summary view, looking at all your pipeline runs, or browsing logs.

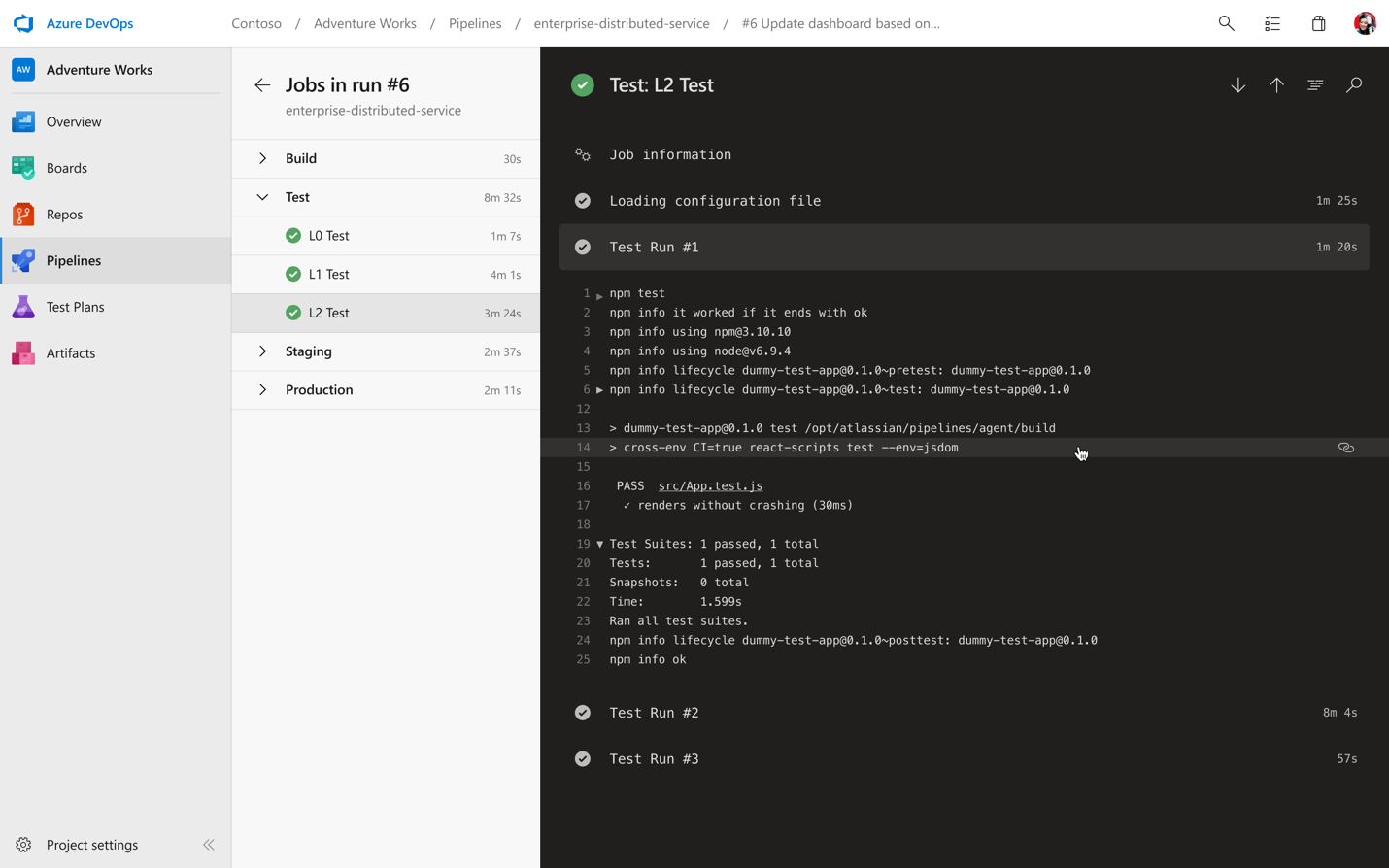

In addition to our new pipelines pages, we have a new log viewing experience as well. It lets you easily jump between stages and jobs, and helps you quickly identify errors and warnings.

This feature will be rolled out for all accounts over the next few days. To enable it, go to the preview features page and turn on the toggle for “Multi-stage pipelines”.

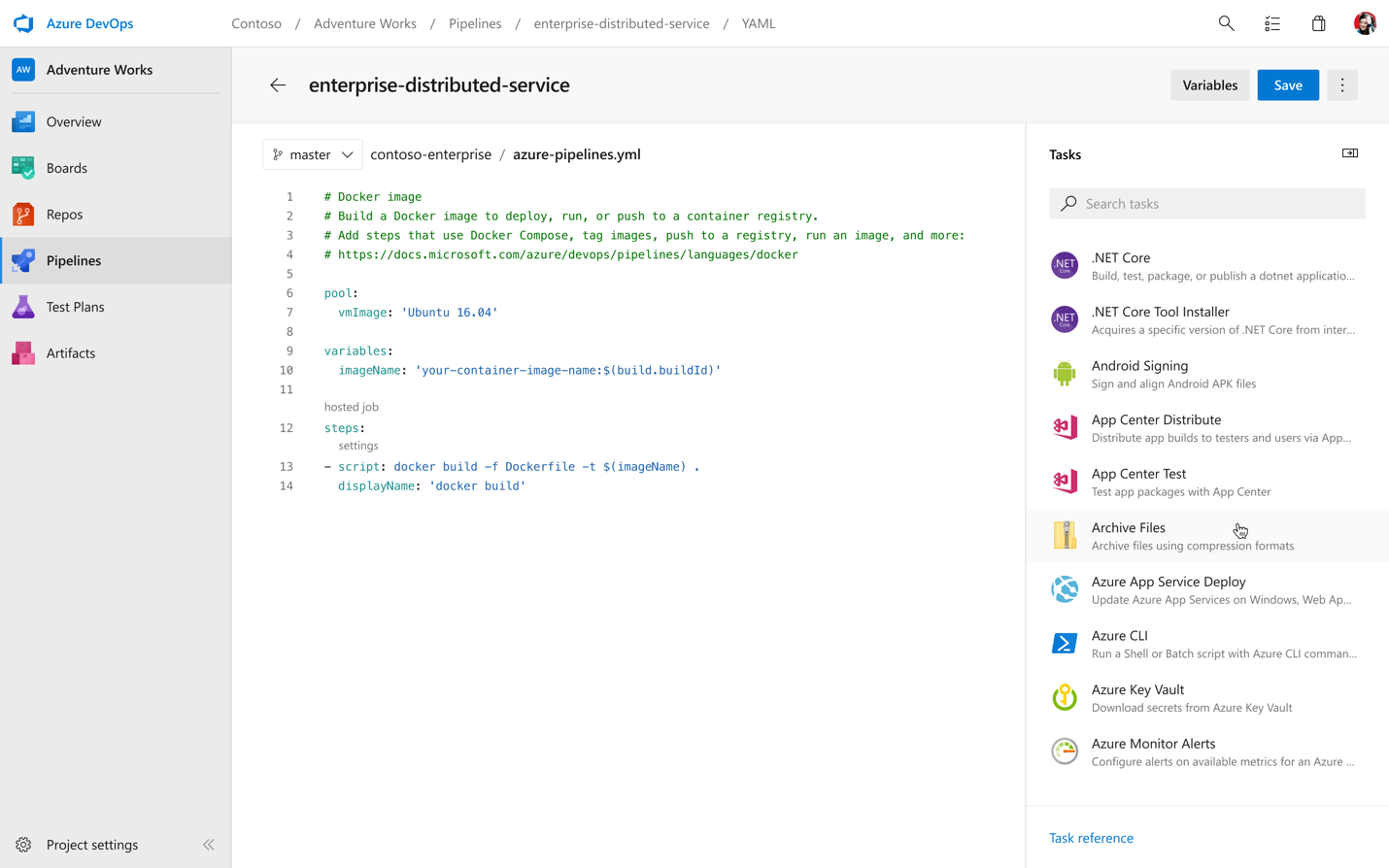

Getting going with YAML

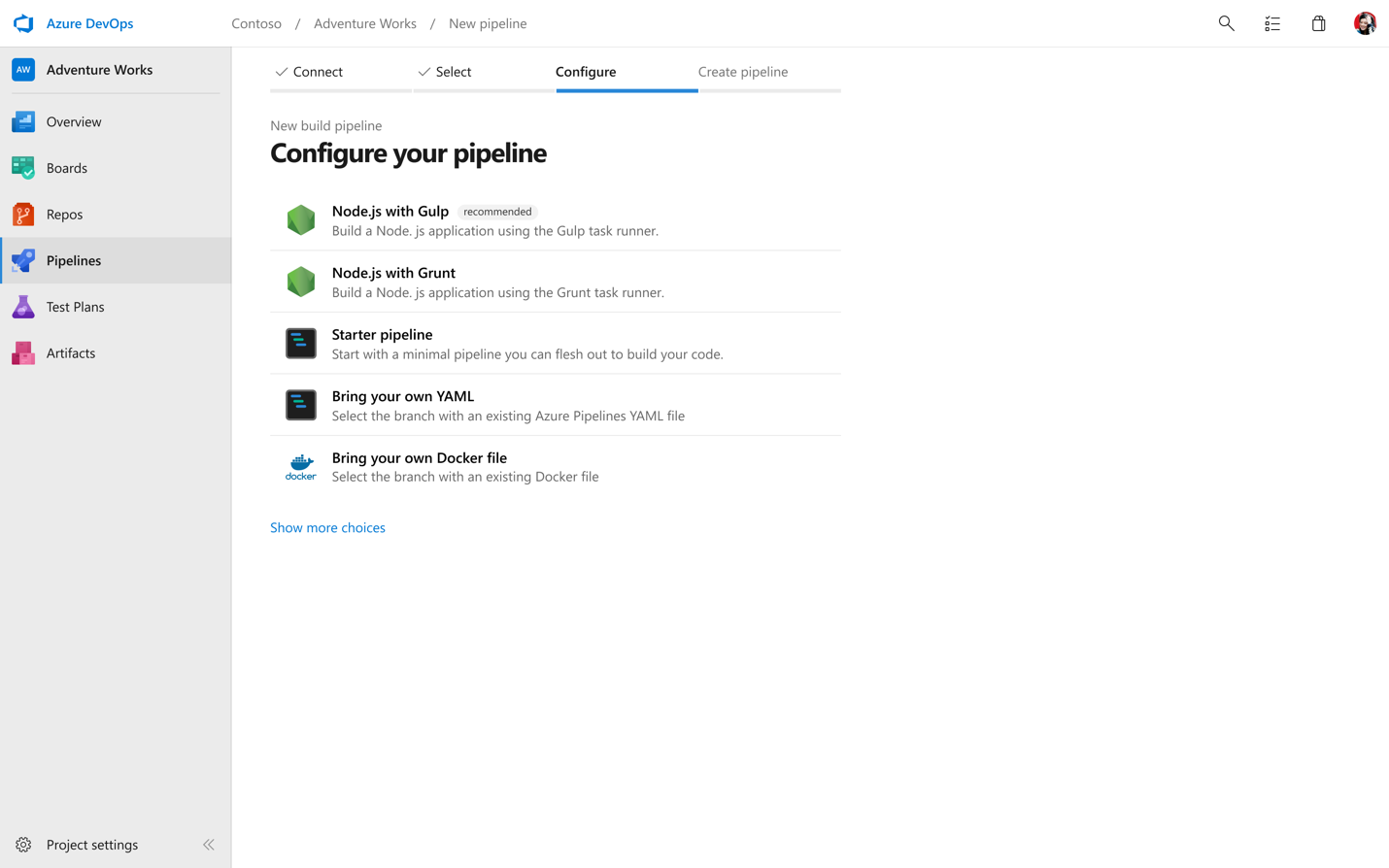

We want you to be able to get going fast wherever your code lives. Once you connect your repo, whether it’s on GitHub, Azure Repos, or your own external Git source, we’ll analyze your code and recommend a YAML template that makes sense for you and gets you up and running quickly.

While we want to get you running quickly, we know you’re going to want to keep configuring and updating your YAML. To help make it even easier to edit and update your pipeline, we’ve created an in-product editor with IntelliSense smart code completion, and an easy task assistant.

Building your first multi-stage pipeline with environments

Bringing CD to YAML means a bunch of great additions in terms of commands and functionality. Let’s cover the basics with a simple pipeline that just builds and deploys an app in two stages.

    stages:
    - stage: Build
      jobs:
      - job: Build
        pool:
          vmImage: 'Ubuntu-16.04'
        continueOnError: true
        steps:
        - script: echo my first build job
    - stage: Deploy
      jobs:
      # track deployments on the environment
      - deployment: DeployWeb
        pool:
          vmImage: 'Ubuntu-16.04'
        # creates an environment if it doesn’t exist
        environment: 'smarthotel-dev'
        strategy:
          # default deployment strategy
          runOnce:
            deploy:
              steps:
              - script: echo my first deployment

If we ran this pipeline, it would execute a first stage, Build, followed by a second stage, Deploy. You are free to create as many stages as you wish, for example to deploy to staging and pre-production environments.
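As a rough sketch of what adding more stages could look like, the pipeline below chains a staging deployment and a pre-production deployment after the build. The stage names, the environment names `smarthotel-staging` and `smarthotel-preprod`, and the echo commands are assumptions for illustration only. Stages already run in the order they are listed; `dependsOn` just makes that ordering explicit.

```yaml
stages:
- stage: Build
  jobs:
  - job: Build
    pool:
      vmImage: 'Ubuntu-16.04'
    steps:
    - script: echo build the app

- stage: DeployStaging
  dependsOn: Build              # explicit, although this is also the default order
  jobs:
  - deployment: DeployWeb
    pool:
      vmImage: 'Ubuntu-16.04'
    environment: 'smarthotel-staging'    # hypothetical environment name
    strategy:
      runOnce:
        deploy:
          steps:
          - script: echo deploy to staging

- stage: DeployPreProd
  dependsOn: DeployStaging      # runs only after the staging stage succeeds
  jobs:
  - deployment: DeployWeb
    pool:
      vmImage: 'Ubuntu-16.04'
    environment: 'smarthotel-preprod'    # hypothetical environment name
    strategy:
      runOnce:
        deploy:
          steps:
          - script: echo deploy to pre-production
```

Giving each stage its own environment keeps the deployment history for staging and pre-production separate.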

You may notice two new interesting concepts in here if you’re familiar with our YAML schema. And if this is the first time you’re seeing our YAML, you can read up on the core concepts here.

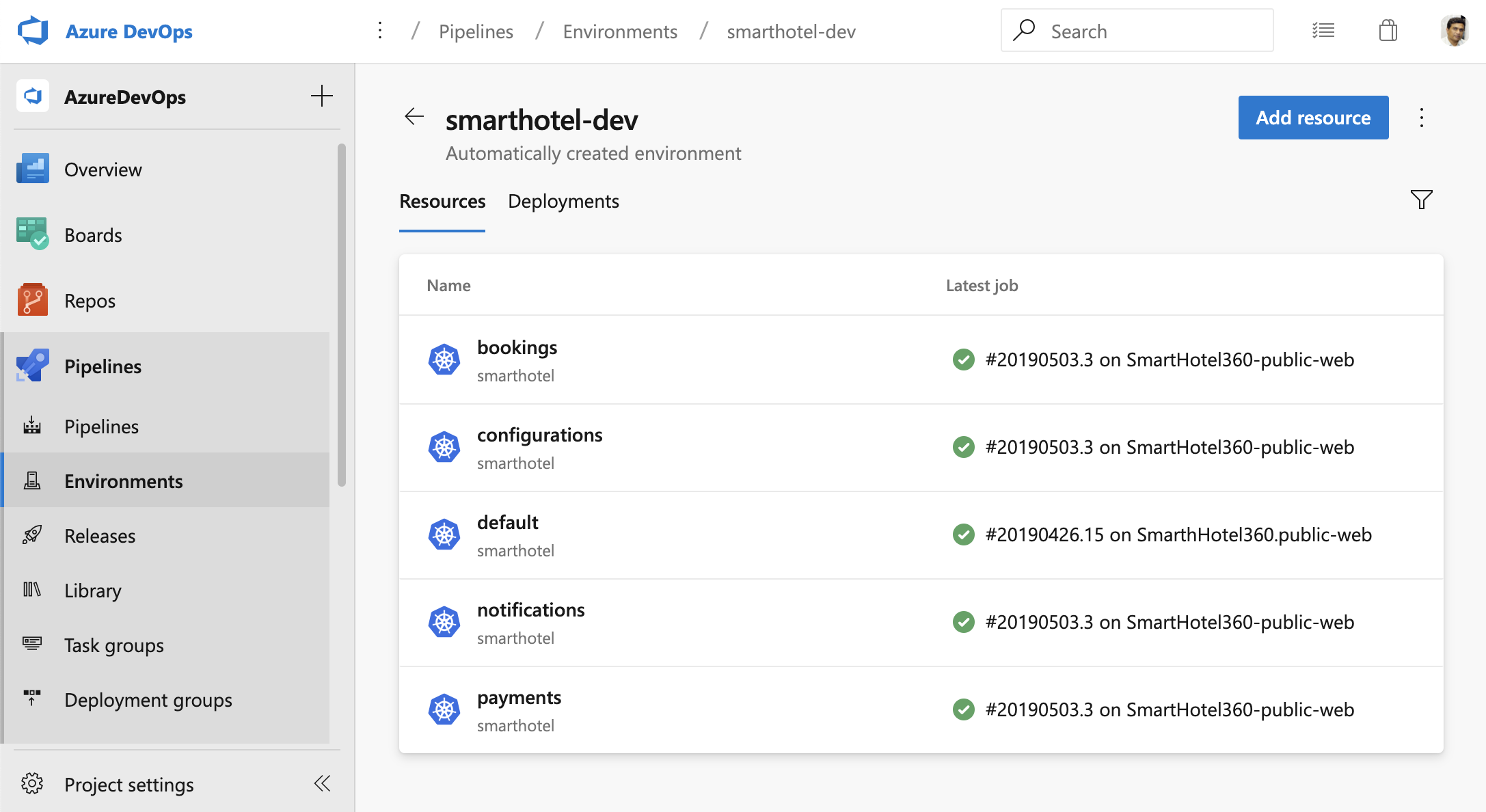

The first new keyword is environment. Environments represent the group of resources targeted by a pipeline, for example, Kubernetes clusters, Azure Web Apps, virtual machines, and databases. Environments are useful for grouping resources, for example under “development”, “staging”, and “production”, and you can define them freely. Defining and using an environment unlocks all kinds of capabilities, for example:

- Traceability of commits and work items

- Deployment history down to the individual resource

- Deeper diagnostics, and (soon) approvals and checks

There’s a lot of great new functionality available today in preview, and even more coming around the corner. You can learn more about environments here.

You will also notice the strategy keyword. It sets the deployment strategy, which defines how your application is rolled out across the cluster. The default strategy is runOnce, but in the future you’ll be able to easily indicate other strategies, such as canary or blue-green.

If you’re ready to start building, check out our documentation for building a multi-stage pipeline with environments. If you want to see some multi-stage pipeline templates to work from, take a look at our templates repo. You can even see those sample pipelines in action inside of our samples project.

Kubernetes

If you have an app that has been containerized (i.e., there is a Dockerfile in the repository), we want to make it easy for you to set up a pipeline in less than 2 minutes to build and deploy to a Kubernetes cluster (including Azure Kubernetes Service). Wrapping your head around Kubernetes can be hard, so we’re making it easy to both get started and continue deploying to your Kubernetes clusters. For more details, read our post on Azure Pipelines and Kubernetes.

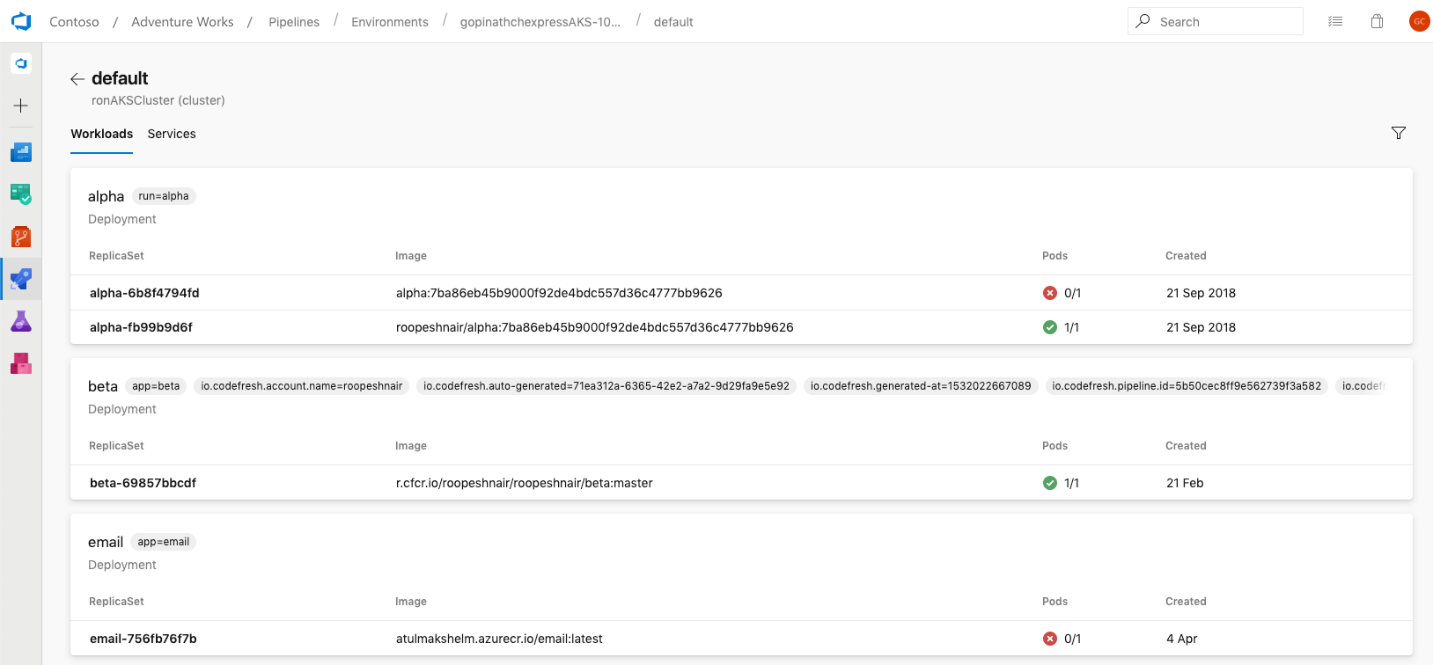

Kubernetes is fully integrated with Azure Pipelines environments too. This lets you view all the deployments, daemonsets, and other workloads running on Kubernetes in each environment, complemented by insights such as readiness and liveness probes of pods. You can use this information and pod-level details (including logs, containers running inside pods, and image metadata) to effectively diagnose and debug any issue, without requiring direct access to the cluster itself.
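For a feel of how the pieces fit together, here is a hedged sketch of a pipeline that builds a container image and deploys it to a Kubernetes resource inside an environment. It assumes the Docker@2 and KubernetesManifest@0 tasks with the inputs shown; check the task reference for your organization before relying on them. The service connection name, registry, image repository, manifest path, and the `smarthotel-dev.web` environment resource are all hypothetical.

```yaml
stages:
- stage: Build
  jobs:
  - job: BuildImage
    pool:
      vmImage: 'Ubuntu-16.04'
    steps:
    # build the image from the repo's Dockerfile and push it to a registry
    - task: Docker@2
      inputs:
        command: buildAndPush
        containerRegistry: 'my-registry-connection'   # hypothetical service connection
        repository: 'smarthotel/web'                  # hypothetical image repository
        Dockerfile: 'Dockerfile'
        tags: $(Build.BuildId)

- stage: Deploy
  dependsOn: Build
  jobs:
  - deployment: DeployToCluster
    pool:
      vmImage: 'Ubuntu-16.04'
    # environment.resource form: targets a Kubernetes resource in the environment
    environment: 'smarthotel-dev.web'                 # hypothetical resource name
    strategy:
      runOnce:
        deploy:
          steps:
          # deployment jobs do not check out sources by default
          - checkout: self
          - task: KubernetesManifest@0
            inputs:
              action: deploy
              manifests: 'manifests/deployment.yml'   # hypothetical manifest path
              containers: 'myregistry.azurecr.io/smarthotel/web:$(Build.BuildId)'
```

Targeting a Kubernetes resource in the environment, rather than the cluster directly, is what lets the environment view show the workloads and pod details described above.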

A look at what’s next

In addition to the preview features that are now available, there are many exciting things just around the corner for Azure Pipelines that we want to share:

- Caching – We’ll be announcing the availability of another much-requested feature very shortly: caching to help your builds run even faster.

- Checks and approvals – We’re improving multi-stage pipelines with the ability to set approvals on your environments, to help control what gets deployed when and where. We’ll keep iterating here to deliver more experiences with checks to help gate your multi-stage pipelines.

- Deployment strategies – We’re adding more deployment strategies as part of the deployment job type, like blue-green, canary, and rolling, to better control how your applications are deployed across distributed systems.

- Environments – We’re adding support for additional resource types in environments, so you can get going quickly with virtual machines (through deployment groups) and Azure Web Apps.

- Mobile – With our new UX, we’re going to start to enable new mobile views in Q2 to help you view the status of pipelines, quickly jump into logs, and complete approvals.

- Pipeline analytics – We’re continuing to grow our pipeline analytics experiences to help you get an all-up picture of the health of your pipelines, so you can know where to go in and dig deeper.

- Tests and code coverage – We’re going to be shipping all-new test and code coverage features and UX in the coming months.

Thank you

Lastly, we want to thank all of our users who have adopted Azure Pipelines. Since our launch last September, we have seen tremendous growth, and we are particularly excited about the response from the open source developer community. It’s been an honor to see Azure Pipelines badges on so many open source projects we love and use regularly ourselves. In the first eight months, public repositories have already used over 88 years of free build time on Azure Pipelines. Check out Martin’s post for some more stats and stories from open source projects. We’ve also received so much great feedback from project maintainers to date and we can’t thank the community enough.

If you’re new to Azure Pipelines, get started for free on our website and learn more about what you can do with Azure Pipelines.

We’ll be posting more deep-dive information in the coming weeks, but as always we’d love to hear your feedback and comments. Feel free to comment on this post or tweet at @AzureDevOps with your thoughts.