In this article we will look at application design and how cache design helps get data from the back end quickly. The scope of this article is general use cases and the best practices available.

There can be many specific scenarios with good solutions, but customization is usually based on a few standard practices. Caching at different levels is the starting point for this topic. Data can be cached on the client side, in the browser, on the server side or even on the database side, and each option suits different applications and functionality.

- Page Caching: Web servers caching frequently requested web pages.

- Database Query Caching: Caching the results of frequently executed database queries to avoid repetitive database hits.

- API Response Caching: Caching the responses from external APIs to reduce network round trips and improve response times.

- Web Server Caching: Web servers like Nginx and Apache can cache static and dynamic content to serve requests faster.

- Browser Caching: Your web browser caches resources (images, CSS, JS) to speed up subsequent visits to websites.

- DNS Caching: DNS resolvers cache domain name to IP address mappings to accelerate website lookups.
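Browser caching and web server/CDN caching are mostly controlled by the HTTP `Cache-Control` response header. The sketch below assumes a hypothetical `cache_control_for` helper that picks a header value from the request path. The directives (`max-age`, `s-maxage`, `immutable`, `no-cache`) are standard, but the file extensions and durations are illustrative choices only.

```python
def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value for a response path (hypothetical policy)."""
    # Assumes static asset URLs are fingerprinted (e.g. app.3f9c2b.js), so the content
    # never changes under the same URL and caches can keep it for a year.
    static_exts = (".js", ".css", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2")
    if path.endswith(static_exts):
        return "public, max-age=31536000, immutable"
    # Assumes API responses are not user-specific: browsers keep them for 60 s (max-age),
    # while shared caches such as a reverse proxy or CDN keep them for 300 s (s-maxage).
    if path.startswith("/api/"):
        return "public, max-age=60, s-maxage=300"
    # Everything else (e.g. HTML pages): may be stored, but must be revalidated before reuse.
    return "no-cache"
```

Separating `max-age` from `s-maxage` lets a shared cache absorb most of the traffic while browsers still pick up changes fairly quickly.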

In this article I will also go through API caching with GraphQL in detail, along with a few other available solutions.

Technologies used in cache design:

Client-side caching is a technique used to store data locally on the client's device to improve the performance and efficiency of web applications.
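A client that keeps a local copy still needs to know when that copy is out of date. Browsers handle this automatically with the `ETag` and `If-None-Match` headers. The sketch below imitates that exchange in plain Python so the logic is visible. `server_get` and `ClientCache` are hypothetical stand-ins, and a truncated SHA-256 hash is just one way to derive an ETag.

```python
import hashlib
from typing import Dict, Optional, Tuple


def server_get(resource: str, if_none_match: Optional[str]) -> Tuple[int, str, str]:
    """Hypothetical server: returns (status, etag, body); body is empty on 304."""
    body = f"content of {resource}"  # stands in for the real response body
    etag = '"' + hashlib.sha256(body.encode()).hexdigest()[:16] + '"'
    if if_none_match == etag:
        return 304, etag, ""  # Not Modified: the client may reuse its stored copy
    return 200, etag, body


class ClientCache:
    """Client-side cache that revalidates stored responses with ETags."""

    def __init__(self) -> None:
        self._store: Dict[str, Tuple[str, str]] = {}  # resource -> (etag, body)
        self.bytes_downloaded = 0

    def get(self, resource: str) -> str:
        cached = self._store.get(resource)
        etag = cached[0] if cached is not None else None
        status, new_etag, body = server_get(resource, etag)
        if status == 304 and cached is not None:
            return cached[1]  # unchanged: serve the local copy, no body transferred
        self._store[resource] = (new_etag, body)  # new or changed: store it
        self.bytes_downloaded += len(body)
        return body
```

The second request for the same resource still makes a round trip, but it transfers no body when nothing has changed.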

Server-side caching is a technique used to store copies of data on the server to improve response times and reduce the load on back-end systems. By keeping frequently accessed data in a cache, servers can quickly serve requests without needing to repeatedly query a database or perform complex computations.
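The simplest form of server-side caching is in-process memoization of an expensive call. Python's standard `functools.lru_cache` keeps the most recently used results, up to `maxsize`. In this sketch `image_stats` is a hypothetical function that stands in for a database query or a complex computation.

```python
import functools
import time


@functools.lru_cache(maxsize=1024)  # keep up to 1024 most recently used results in memory
def image_stats(image_id: int) -> tuple:
    # Hypothetical expensive work, e.g. a database query or aggregation.
    time.sleep(0.01)
    return (image_id, image_id * 10)


image_stats(1)  # miss: computed and stored
image_stats(1)  # hit: served from memory
print(image_stats.cache_info())  # CacheInfo(hits=1, misses=1, maxsize=1024, currsize=1)
```

`lru_cache` never expires entries by itself. For data that changes, such as like counts, it needs an explicit `image_stats.cache_clear()` or a TTL-based cache instead.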

Many legacy systems used Akamai to cache content. It was a very good solution for large-scale applications because Akamai has an edge network around the world and its cache servers are quite powerful. That is how it started: images and other key web content were "Akamaized" and delivered to many websites.

Many companies have since built similar products. Some are based on edge network infrastructure and some are cloud based. Here are a few examples:

Content Delivery Network (CDN) Caching Techniques

To implement CDN caching, website owners can use the following solutions:

- Cloud-based CDNs: Services like Amazon CloudFront, Google Cloud CDN, and Cloudflare offer CDN solutions that can be easily integrated with the website.

- Self-hosted CDNs: Website owners can also set up their own CDN infrastructure using open-source solutions like Varnish Cache or Nginx.

Dynamic Caching Techniques

To implement dynamic caching, website owners can use the following solutions:

- Varnish Cache: Varnish Cache can be used to cache the output of dynamic scripts and serve them directly from the cache.

- WordPress Caching Plugins: WordPress has a range of caching plugins, such as WP Rocket, W3 Total Cache, and WP Super Cache, that can help with dynamic caching.

- Custom Caching Mechanisms: Website owners can also build their own custom caching mechanisms using in-memory data stores like Memcached or Redis.
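Custom mechanisms built on Memcached or Redis usually follow the cache-aside pattern. The application reads from the cache first. On a miss, it loads the value from the source and writes it back with a TTL. In this sketch `InMemoryStore` is a hypothetical stand-in that mimics the `get(key)` and `set(key, value, ex=seconds)` calls of a Redis client, so the example runs without a server. `cache_aside` and the `image:1` key are also illustrative names.

```python
import json
import time
from typing import Any, Callable, Dict, Optional, Tuple


class InMemoryStore:
    """Stand-in for a Redis client: mimics get(key) and set(key, value, ex=seconds)."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[str, Optional[float]]] = {}

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None:
            return None
        value, expires_at = item
        if expires_at is not None and time.monotonic() >= expires_at:
            del self._data[key]  # lazily evict expired keys on read
            return None
        return value

    def set(self, key: str, value: str, ex: Optional[int] = None) -> None:
        expires_at = time.monotonic() + ex if ex is not None else None
        self._data[key] = (value, expires_at)


def cache_aside(store: Any, key: str, load: Callable[[], Any], ttl_seconds: int = 300) -> Any:
    """Cache-aside read: try the cache, fall back to the loader, then populate the cache."""
    cached = store.get(key)
    if cached is not None:
        return json.loads(cached)  # cache hit
    value = load()  # cache miss: query the database or API
    store.set(key, json.dumps(value), ex=ttl_seconds)
    return value
```

A redis-py `redis.Redis` client exposes compatible `get` and `set(..., ex=...)` methods, so it could be passed as `store`. Its `get` returns bytes, and `json.loads` accepts bytes.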

The techniques above rely more on third-party infrastructure to scale the application than on fine-tuning it with built-in caching. Built-in caching helps only up to a point. Beyond that, the options are hardware scaling, which is expensive, or the third-party vendor solutions mentioned above.

For some use cases, simply plugging in a third-party service solves both performance and scalability. For example, my website reads content (images) from Cloudinary. I could still deliver the images from Azure Blob Storage and render them on the page. However, Cloudinary already makes sure these images load faster, and it is easy to adopt because no additional coding is required.

Technical challenge:

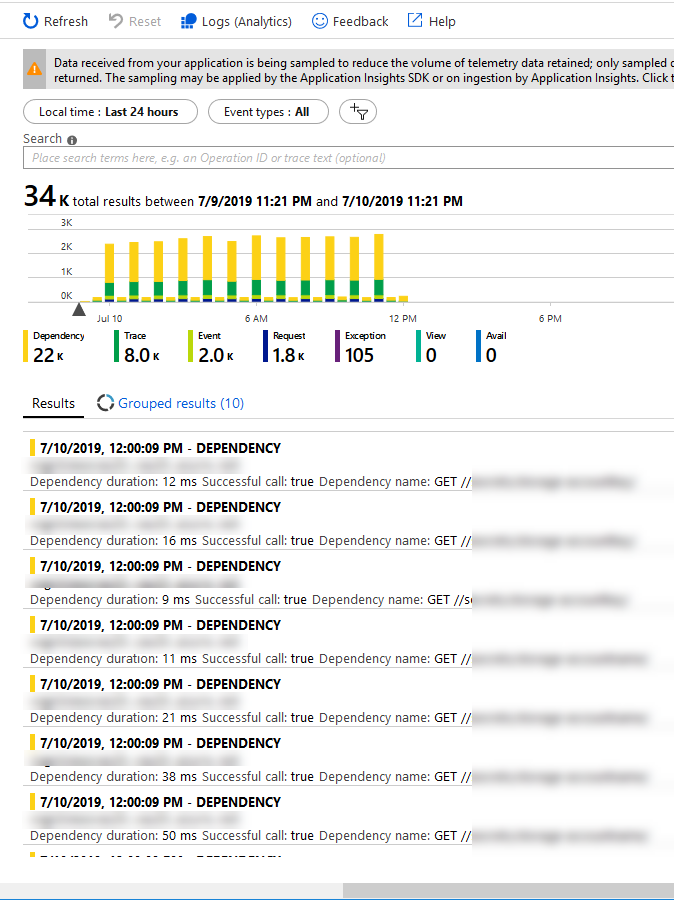

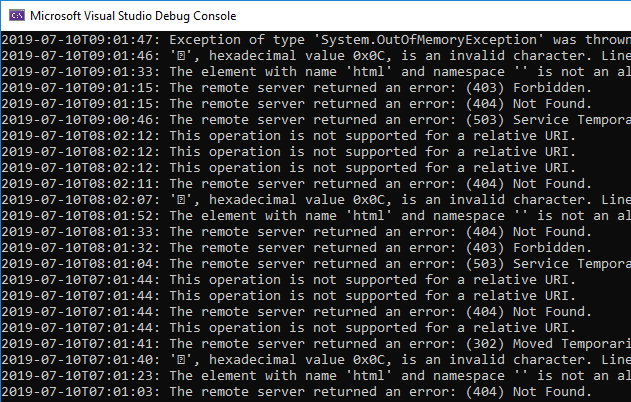

The initial design fetched data from Azure Blob Storage. This is slow because www.talash.azurewebsites.net displays 100 images on its landing page. These images change on each request, and the API is a GraphQL endpoint.

The GraphQL request is cached, but once the cache expires it takes 19 seconds to get the image URLs and the image metadata, such as likes and downloads. On top of that, the design should show likes for images in real time, i.e., update this data when other users who are browsing download or comment on an image. If we request the images every time, the load time is 19 seconds. If we cache the request data but still want to show user actions in real time, the design becomes tricky.

Solution:

1. SignalR solved the problem of real-time updates. It works well: if two users browse the same content, any action one user takes is reflected on the other user's page.

2. The GraphQL API is cached at the server level, which reduces fetch time. A fresh fetch takes 19 seconds, while a cached response takes 0.5 ms. Apollo Client is used on the client side to get more control over the cache.

3. When a user expands the toolbar that shows image information such as clicks and download counts, the latest data is fetched. This section is collapsible, so only interested users fetch the latest data. Otherwise, the data stays as it was when the page loaded. This avoids pushing every user click on every image to all concurrent users.

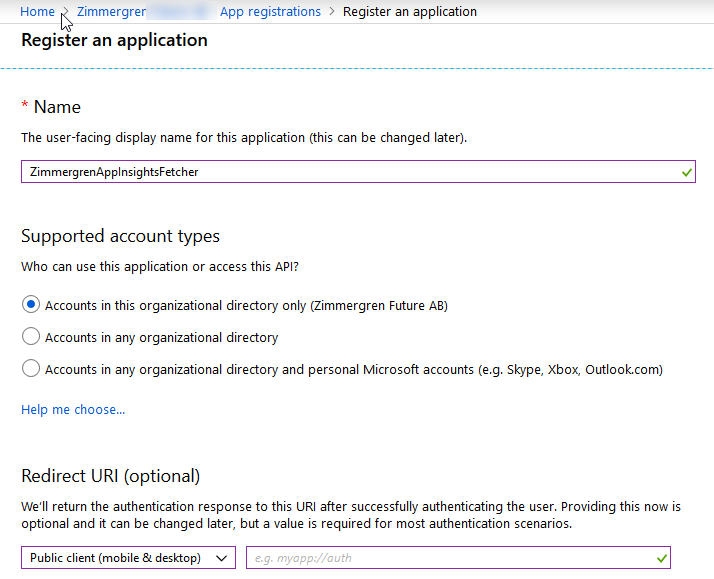

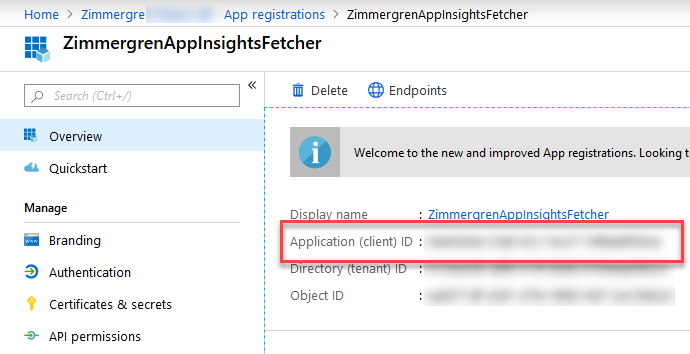

4. To cut the 19 seconds after cache expiry, a workaround was added. Some Azure resources have a "cold start" overhead of around 5 to 8 seconds. To avoid it, a function app runs every 5 minutes to keep them warm, which reduces any latency related to "cold start" in Azure.
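Caching the GraphQL API at the server level (item 2 above) needs a stable cache key, so that the same query with the same variables always hits the same entry. The sketch below is a minimal in-process version. `graphql_cache_key`, `ResponseCache` and the `resolve` callback are hypothetical names. It is not how Apollo or any GraphQL server library implements caching.

```python
import hashlib
import json
import time
from typing import Any, Callable, Dict, Optional, Tuple


def graphql_cache_key(query: str, variables: Optional[Dict[str, Any]] = None) -> str:
    """Build a stable cache key from the query text and its variables."""
    # Naive normalization: collapses all whitespace, including inside string literals.
    normalized_query = " ".join(query.split())
    normalized_vars = json.dumps(variables or {}, sort_keys=True)  # ignore key order
    digest = hashlib.sha256(f"{normalized_query}|{normalized_vars}".encode()).hexdigest()
    return f"gql:{digest}"


class ResponseCache:
    """Server-side TTL cache for GraphQL responses (hypothetical, in-process)."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, result)

    def execute(self, query: str, variables: Optional[Dict[str, Any]],
                resolve: Callable[[str, Optional[Dict[str, Any]]], Any]) -> Any:
        key = graphql_cache_key(query, variables)
        entry = self._entries.get(key)
        if entry is not None and time.monotonic() < entry[0]:
            return entry[1]  # fresh: skip the slow resolver
        result = resolve(query, variables)  # slow path, e.g. the 19-second fetch
        self._entries[key] = (time.monotonic() + self.ttl, result)
        return result
```

A TTL of 300 seconds would match the 5-minute refresh interval used elsewhere in this design. That value is an assumption, not a measured setting.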

Enhancements:

1. The load-images query should get new data every 5 minutes, and the fetch should be cached. It should also avoid a "stampede", where a cache expiration causes a bad user experience for multiple concurrent users.

2. A "User Query"-like data fetching and refetching mechanism to get image information and refetch modified information. It should handle mutations carefully.

3. Look at scalability aspects, such as application restarts or "cold start" latency in cloud infrastructure. Also look at the features of a distributed cache using Redis or other external cache systems.
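Item 1 above, the stampede problem, can be handled with request coalescing ("single flight"). When a key expires, only one caller reloads it. Concurrent callers wait and then reuse the fresh value. This is a minimal sketch that assumes a single process with threads, and `SingleFlightCache` is a hypothetical name. With several app instances, a distributed lock (for example, in Redis) would be needed to get the same effect.

```python
import threading
import time
from typing import Any, Callable, Dict, Tuple


class SingleFlightCache:
    """TTL cache where only one caller reloads an expired key; others wait for its result."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self._locks: Dict[str, threading.Lock] = {}  # one lock per key, never removed here
        self._guard = threading.Lock()  # protects the _locks dict itself

    def _lock_for(self, key: str) -> threading.Lock:
        with self._guard:
            return self._locks.setdefault(key, threading.Lock())

    def get(self, key: str, load: Callable[[], Any]) -> Any:
        entry = self._entries.get(key)  # fast path; dict reads are atomic in CPython
        if entry is not None and time.monotonic() < entry[0]:
            return entry[1]
        with self._lock_for(key):
            # Re-check: another thread may have refreshed the key while we waited.
            entry = self._entries.get(key)
            if entry is not None and time.monotonic() < entry[0]:
                return entry[1]
            value = load()  # only one thread per key reaches the slow loader
            self._entries[key] = (time.monotonic() + self.ttl, value)
            return value
```

With this pattern, the first user after an expiry still waits for the reload. Serving the stale value while one thread refreshes in the background (stale-while-revalidate) would hide that delay as well.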

In the end, the landing page shows all images with their information updated in real time while still caching content, and it loads in 20 ms. The solution can be fine-tuned further. For example, any mutation could expire the cached metadata and then be sent to concurrent users as a notification. Even so, the design above solves many performance issues through caching while avoiding data integrity issues.
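The idea of letting a mutation expire cached metadata and then push the fresh values to concurrent users can be sketched as follows. In the real app the push channel would be SignalR. Here a list of Python callbacks stands in for connected clients, so nothing below is the SignalR API. `ImageMetadataService`, `like` and the dict-based store are hypothetical.

```python
from typing import Callable, Dict, List

Metadata = Dict[str, int]


class ImageMetadataService:
    """Hypothetical service: a mutation expires cached metadata and notifies subscribers."""

    def __init__(self) -> None:
        self._db: Dict[str, Metadata] = {}  # stands in for the real data store
        self._cache: Dict[str, Metadata] = {}
        self._subscribers: List[Callable[[str, Metadata], None]] = []

    def subscribe(self, callback: Callable[[str, Metadata], None]) -> None:
        self._subscribers.append(callback)  # e.g. one callback per connected client

    def get_metadata(self, image_id: str) -> Metadata:
        if image_id not in self._cache:  # cache-aside read
            self._cache[image_id] = dict(self._db.get(image_id, {"likes": 0, "downloads": 0}))
        return dict(self._cache[image_id])  # return a copy so callers cannot mutate the cache

    def like(self, image_id: str) -> None:
        meta = self._db.setdefault(image_id, {"likes": 0, "downloads": 0})
        meta["likes"] += 1  # 1. write the mutation to the store
        self._cache.pop(image_id, None)  # 2. expire the cached metadata
        fresh = self.get_metadata(image_id)  # 3. reload it once
        for notify in self._subscribers:  # 4. push the fresh values to every subscriber
            notify(image_id, fresh)
```

Only the changed image's metadata is reloaded and pushed, so the cached image list can keep its longer TTL.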

There are many more use cases, designs and solutions to explore. The example above is meant to show the different dimensions of caching. Caching support differs across languages and technologies. Here are a few good examples for Node.js, React and a few other technologies.

Node.js:

React:

.NET:

Angular:

1. Twitter

- Caching Strategy: Cache-Aside and In-Memory Caching

- Problem: Twitter deals with massive amounts of data, with millions of tweets being read and written every second. The need to quickly serve user timelines and handle the high read/write throughput is critical.

- Solution: Twitter uses Memcached, an in-memory caching system, to store timelines and user sessions. By caching the results of expensive database queries, Twitter can serve user requests more quickly.

- Benefits: This reduces the load on the primary database, speeds up data retrieval, and enhances the overall user experience.

2. Netflix

- Caching Strategy: Distributed Caching and Write-Through Caching

- Problem: Netflix needs to deliver video content to millions of users worldwide with minimal latency and high reliability.

- Solution: Netflix uses an open-source tool called EVCache, which is based on Memcached, to cache metadata and frequently accessed data. This distributed caching system spans multiple data centers to ensure data availability and quick access.

- Benefits: This strategy allows Netflix to serve content recommendations, user data, and other API responses quickly, ensuring a seamless viewing experience even during peak times.
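The Netflix case names write-through caching as part of its strategy. Below is a minimal single-process sketch of the general pattern, not EVCache's API. Plain dicts stand in for both the cache and the database, and `WriteThroughCache` is a hypothetical name.

```python
from typing import Any, Dict, Optional


class WriteThroughCache:
    """Write-through: every write updates the backing store and the cache together."""

    def __init__(self, store: Dict[str, Any]) -> None:
        self.store = store  # stands in for the database
        self.cache: Dict[str, Any] = {}

    def write(self, key: str, value: Any) -> None:
        self.store[key] = value  # persist first, so a failed write never leaves
        self.cache[key] = value  # the cache ahead of the database

    def read(self, key: str) -> Optional[Any]:
        if key in self.cache:
            return self.cache[key]  # hit: matches the last write through this cache
        value = self.store.get(key)  # miss (e.g. a fresh instance): load and cache it
        if value is not None:
            self.cache[key] = value
        return value
```

Compared with cache-aside, writes cost a little more because they touch both layers. In return, reads right after a write never see stale data from this cache.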

3. Amazon

- Caching Strategy: Content Delivery Network (CDN) Caching and Cache-Aside

- Problem: Amazon's e-commerce platform handles an immense volume of product queries, user sessions, and transactional data.

- Solution: Amazon uses Amazon CloudFront, a CDN, to cache static assets like images, videos, and CSS files at edge locations closer to users. Additionally, they employ DynamoDB with DAX (DynamoDB Accelerator) to provide fast in-memory acceleration for read-heavy workloads.

- Benefits: This reduces latency, speeds up data access, and decreases the load on backend systems, ensuring a fast and reliable shopping experience.